Alternative data & insights for April: AI cloud workloads, WhatsApp ramp-up, hardware engineer frenzy

This article is sponsored by Revealera.

Revealera is a New York-based company that provides data and insights on hiring trends and trending products and skills across the globe.

Analyze the hiring trends for 4,000+ companies and usage trends for 500+ SaaS products

If you are interested in sponsoring the next article, you can reach me via DM on Twitter @RihardJarc

Hi everyone,

Here is the alternative data report and some insights that I found most interesting over the last month:

Cloud providers and AI:

Some interesting data that I read in the last month and want to comment on:

Companies are starting to move past the »test« phase of AI workloads

Open-source AI models vs. closed models

The future is constrained by the power grid and energy

Synthetic data is the solution for newer models

Here are some of the highlighted insights:

It finally seems like AI workloads for cloud customers are starting to move from the test phase to production. A current AWS employee working on AWS SageMaker offers a glimpse of this:

»Companies are starting to invest heavily into any sort of PEFT methodology like parameter-efficient fine-tuning, they are already investing a lot into compute, into setting up their own team of data scientists, ML engineers, hardware engineers, and identifying a use case and to eventually optimize on the business metric of that use case. It's definitely here to stay until the end of 2025. I only see the demand growing every single day with new customers since the industry has now standardized into LLM providers who are very heavy on training and all the other customers who are LLM users who will be very heavy on fine-tuning.«

source: AlphaSense

This expert also described how clients are combining open-source and closed-source AI models, which I found interesting:

»…they'll take two different models in the market, either a mix of open source and closed source or maybe two to three different open source models, and they'll run fine-tuning on top of their own proprietary data.«

With the release of Llama 3 a few days ago, and given its capabilities (on par with or better than GPT-4 and some other closed models), this trend might accelerate. A future in which LLMs become a commodity and clients pay mainly for infrastructure seems increasingly likely.

Another increasingly loud topic is the constraints AI will soon face. The bottleneck is not so much chips as energy and the capacity of the power grid. Even Mark Zuckerberg acknowledged in a podcast just a few days ago that this is one of the biggest factors that will slow down AI development. Other AI experts often share similar thoughts. One recent example I read was an interview with a former AWS employee who worked in data center planning. He said that finding the right land, combined with access to power, is a big challenge for data center providers. Renewables are also a problem, since they are not reliable 24/7. Because of these power constraints, he also believes liquid cooling could become a very interesting topic, as liquid cooling achieves significantly better PUE (power usage effectiveness) metrics. When power is constrained, a lower-PUE liquid immersion design lets you get the most out of the power you have.
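As a rough illustration of why PUE matters under a power cap: PUE is defined as total facility power divided by the power delivered to IT equipment, so for a fixed grid allocation, the power left for compute is the allocation divided by PUE. The 100 MW allocation and the PUE values of 1.5 and 1.1 below are hypothetical round numbers chosen for illustration, not figures from the interview:

$$
\text{PUE} = \frac{P_{\text{facility}}}{P_{\text{IT}}} \quad\Longrightarrow\quad P_{\text{IT}} = \frac{P_{\text{facility}}}{\text{PUE}}
$$

$$
\frac{100\ \text{MW}}{1.5} \approx 66.7\ \text{MW}, \qquad \frac{100\ \text{MW}}{1.1} \approx 90.9\ \text{MW}
$$

Under these assumed numbers, the lower-PUE design leaves roughly 36% more power for compute from the same grid connection.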

Nonetheless, hyperscalers are still investing heavily in building new data centers. Here is an interesting chart showing the number of job openings for data center engineers.

In absolute terms, Amazon has the most openings for data center engineers. Still, the significant uptick in data center job openings at Oracle and Google is notable.

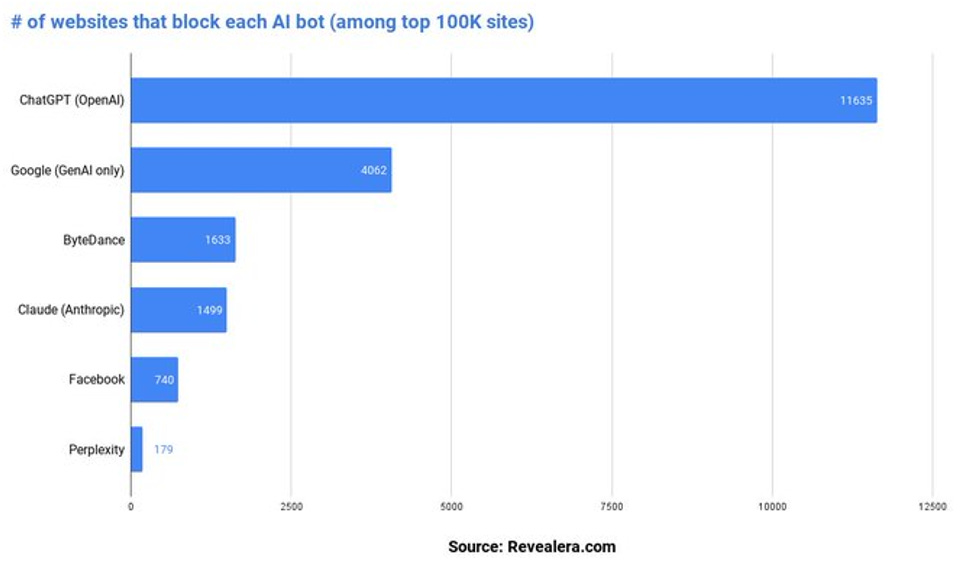

Going back to LLMs, there is an emerging trend of using synthetic data to train newer model versions. This means that older versions of LLMs are training newer versions, with human input eliminated or marginal. The goal is to build more accurate and powerful future models, but IP issues probably also play a strong role. The number of websites blocking access to different AI providers is quite telling.

Ad tech

Some interesting data that I read in the last month and want to comment on:

Advertiser demand looks good

Instagram Reels is performing very well compared to TikTok

Continued ramp-up in WhatsApp growth

Here are some of the highlighted insights:

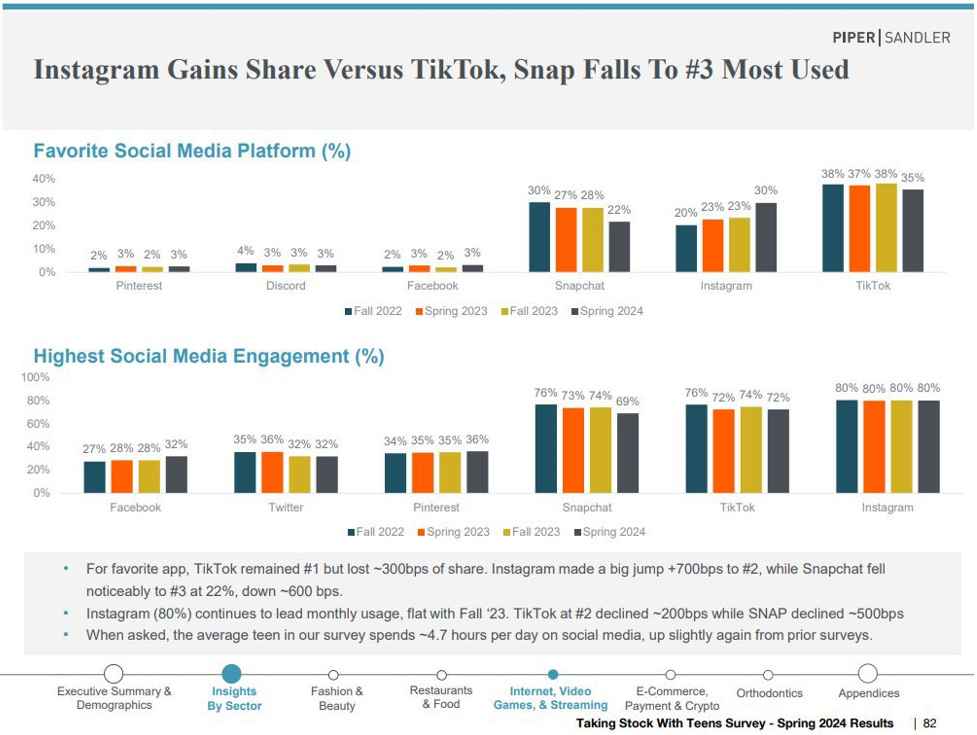

The recent Piper Sandler teen survey showed a surprising rise in Instagram's popularity in spring 2024. The share of teens naming Instagram as their favorite app jumped to 30%, compared to 20% during the same period last year. Meanwhile, Snapchat fell from 30% last year to 22% this year, and TikTok fell from 38% last year to 35% this year.

A similar pattern, along with a monetization uptick, was also highlighted in an interview with a former Meta director:

»I had a portion of my team that was dedicated to sourcing new Reels content by, in some cases, paying creators to switch over from TikTok to Instagram Reels and make that the platform of their choice. Now in 2024, I think you're seeing a ton of parity around Reels consumption versus TikTok consumption for many key demographic users. You're seeing a significant monetization uptick that Facebook has been able to deploy over the last couple of quarters in making sure that Reels brings in a comparable model of monetization versus what historically was happening on Instagram or Facebook platforms.«

source: AlphaSense

The same expert also argues that Meta has become even stronger because of the iOS signal loss and the resulting need to refocus on AI-driven targeting:

»I think Meta has, because of Apple's changes, only become more stronger in their ability to target using proprietary first-party data against all of their products and services. I think AI only enables that further.«

Another topic highlighted in several expert interviews over the past month was WhatsApp, and more specifically WhatsApp Business. A digital ad expert whose clients spent over $120M on Meta in Q4 alone stated that 20% of his advertisers are now using chatbots. In Q4 2023, they spent 4% of their ad budget on WhatsApp Business, up from 1% in Q4 2022. He also said he sees significant opportunity in this growing segment.

The expert also noted strong growth in spending on Meta, up 32% for the quarter, with strength mostly coming from e-commerce and retail (up 53%).

On the same topic, a former Meta employee who worked there for more than 10 years, including on WhatsApp, made an interesting observation. In the interview, he explains well how WhatsApp's culture inside Meta is very different from how Facebook has done things in the past. What caught my eye was his statement that by the time he left in 2023, WhatsApp's business side had become more closely linked to Meta's broader organization, and that WhatsApp's engineering side would probably follow. This may indicate that Meta is taking a more active approach to monetizing WhatsApp and positioning it as its next big revenue and profit driver.

The same expert also noted that WhatsApp was once almost a siloed business within Meta and is now finally getting more resources.

He also made an interesting point about why WhatsApp lags in monetization: part of the reason may be its encryption, which limits how much WhatsApp can use Meta's robust monetization platform.

Semiconductors

Some interesting data that I read in the last month and want to comment on:

Big tech is getting ready for the AI fight with custom silicon

Expectations of new AI chips powering the next big cycle of smartphone upgrades

Almost every expert you talk to sees CUDA as Nvidia's critical edge

Here are some of the highlighted insights:

When it comes to semiconductors, we can't get past the fact that almost every big tech company is developing its own custom silicon. The main reason is to keep their AI fate in their own hands rather than in Nvidia's.

Hiring data supports this point: the number of job openings for hardware engineers has skyrocketed relative to software engineers. Notably, this is the first time that hardware engineer openings have significantly diverged from software engineer openings.

A prevailing view among many experts is that upcoming GenAI features and GenAI smartphone apps will trigger a big smartphone upgrade cycle, driven by the need for on-device AI chips.

Nvidia's CUDA continues to be a key topic in almost every discussion with a semiconductor expert. A former Nvidia employee added some points to this well-known topic that I found interesting. One was the advantage Nvidia's infrastructure gives customers deploying AI models: both data centers and on-device edge compute can run on Nvidia hardware and the same software stack, making it easier to use AI models across data centers and the edge than with custom, workload-specific silicon.

I'm super excited, as earnings season is right around the corner, and with it comes new data to digest.

Until next time, take care.

As always, if you liked the article and found it informative, I would appreciate it if you shared it.

Disclaimer:

I own Amazon (AMZN), Meta (META), Microsoft (MSFT), and Oracle (ORCL) stock.

Nothing contained in this website and newsletter should be understood as investment or financial advice. All investment strategies and investments involve the risk of loss. Past performance does not guarantee future results. Everything written and expressed in this newsletter is only the writer's opinion and should not be considered investment advice. Before investing in anything, know your risk profile and if needed, consult a professional. Nothing on this site should ever be considered advice, research, or an invitation to buy or sell any securities.
