Amazon Trainium: Scaling AI Without Breaking the Bank

Hey everyone,

In this article, I am publishing a comprehensive deep dive into Amazon’s custom ASIC chip, Trainium. I will cover the technical details, as well as the performance, costs, and strategic factors of this unit, and what they mean for Amazon and the broader semiconductor ecosystem.

Topics covered:

How Amazon’s custom chip businesses started

How does Trainium work

The software optimization layer

Performance of Trainium

Why is Trainium this cheap?

The biggest thing holding Trainium back

AWS’s Trainium Business Strategy and Competitive Positioning

Let’s dive into it.

How Amazon’s custom chip businesses started

Amazon Web Services (AWS) entered custom silicon development after acquiring the Israeli chip startup Annapurna Labs in 2015. This acquisition paved the way for AWS’s in-house chips, such as the Graviton CPU family and Nitro virtualization cards, and later for its machine learning accelerators. AWS’s machine learning chips comprise Inferentia (for ML inference) and Trainium (for large-scale model training), names that directly reflect their intended use cases. The recent Trainium generations, Trainium2 and Trainium3, can handle high-end inference use cases in addition to training.

The first-generation AWS Trainium was unveiled at re:Invent 2020 as Amazon’s first in-house training accelerator. Built on a 7 nm process with roughly 55 billion transistors, Trainium1 began powering EC2 Trn1 instances by 2022. AWS then launched Trainium2 (second generation) in late 2023, fabricated at 5 nm and featuring a new NeuronCore-v3 architecture. Trainium2 dramatically scaled up the core count – quadrupling the number of compute cores per chip – and introduced support for structured sparsity, achieving about 3.5× higher throughput than Trainium1 despite slightly lower per-core frequencies.

By late 2024, Trainium2 was available via EC2 Trn2 instances and UltraServer systems, delivering 30–40% better price-performance than contemporary GPU-based instances (such as Nvidia A100/H100 instances), according to AWS.

Most recently, at AWS re:Invent 2025, Amazon announced Trainium3, its third-generation AI training chip built on an advanced 3 nm node. The new chip powers the EC2 Trn3 UltraServer – a 144-chip rack-scale system – and offers up to 4.4× more compute performance, ~4× higher energy efficiency, and nearly 4× more memory bandwidth compared to the prior Trainium2 generation.

How does Trainium work

Under the hood, Trainium chips are highly specialized ASICs focused on matrix math and parallelism. Each chip contains multiple NeuronCores, AWS’s term for its AI-optimized compute cores. Notably, starting with Trainium, Annapurna Labs added dedicated Collective Communication cores alongside the scalar, vector, and tensor engines in each NeuronCore (The Next Platform has published a good technical write-up on this). These communication engines accelerate distributed training operations (e.g., all-reduce for gradients), reflecting a “system-first” design that tightly couples compute with networking. As one AWS architect explained, they »first designed the full system and [worked] backwards... to specify the most optimal chip« rather than treating the chip in isolation. This co-design philosophy (developing silicon alongside software and systems) enables AWS to tailor Trainium’s architecture to improve large-scale training efficiency.
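
To make the all-reduce operation mentioned above more concrete, here is a minimal pure-Python sketch of a ring all-reduce, one common way to sum gradients across workers. It illustrates the general technique only and is not AWS’s implementation; the function name `ring_all_reduce` is hypothetical, and the sketch assumes one gradient value per chunk, so each vector must be as long as the number of workers.

```python
def ring_all_reduce(grads):
    """Sum gradient vectors across workers arranged in a ring.

    grads[r] is worker r's local gradient vector. For simplicity this sketch
    assumes one value per chunk, so every vector must have len(grads) entries.
    Returns what each worker holds afterwards: the element-wise sum.
    """
    n = len(grads)
    if any(len(g) != n for g in grads):
        raise ValueError("this sketch needs vector length == number of workers")
    data = [list(g) for g in grads]

    # Phase 1 (reduce-scatter): each worker passes one partial sum to its
    # right-hand neighbour per step. After n-1 steps, worker r owns the
    # complete sum for chunk (r + 1) % n.
    for step in range(n - 1):
        sends = [((r - step) % n, data[r][(r - step) % n]) for r in range(n)]
        for r, (chunk, value) in enumerate(sends):
            data[(r + 1) % n][chunk] += value

    # Phase 2 (all-gather): the completed chunks travel around the ring
    # until every worker holds every fully reduced chunk.
    for step in range(n - 1):
        sends = [((r + 1 - step) % n, data[r][(r + 1 - step) % n]) for r in range(n)]
        for r, (chunk, value) in enumerate(sends):
            data[(r + 1) % n][chunk] = value

    return data


local_grads = [[1, 2, 3], [10, 20, 30], [100, 200, 300]]
print(ring_all_reduce(local_grads))
```

Every worker ends up with the same summed gradient, and each one only ever talks to its neighbour. That communication pattern is the kind of work the dedicated communication cores are meant to take off the compute engines.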

Data Types and Throughput:

Trainium supports a range of numeric formats commonly used in AI: FP32, BF16/FP16, and, notably, a configurable FP8 format designed to boost throughput.

It is essential to understand the term FP (floating point) because, later in the article, we will compare the performance of Nvidia’s Blackwell, Trainium3, and Google’s TPUv7 at specific FP precisions. For less technical readers, the FP format is the »resolution« of AI math. Just as a 4K video requires more data and a faster internet connection than a 720p video, FP32 requires more power and time than FP4. By moving to lower-precision FP formats, chip makers are effectively reducing the ‘data weight’ of AI, allowing it to run faster with less electricity. The trade-off is lower numerical accuracy (an increased risk of errors).
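
For readers who want to see the precision trade-off in numbers, the following small Python sketch stores the same value in FP32 and FP16 and measures how far the stored value drifts from the original. Python’s standard `struct` module has no FP8 or FP4 format, so this only illustrates the general trend with the two formats it does support; the helper name `round_trip` is hypothetical.

```python
import struct


def round_trip(value: float, fmt: str) -> float:
    """Store a value in the given binary format and read it back."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


value = 0.1
# "<f" is a 32-bit float (FP32), "<e" is a 16-bit float (FP16).
for name, fmt in [("FP32", "<f"), ("FP16", "<e")]:
    stored = round_trip(value, fmt)
    bits = struct.calcsize(fmt) * 8
    error = abs(stored - value)
    print(f"{name}: {bits} bits per number, rounding error {error:.2e}")
```

FP16 needs half the bits of FP32, but its rounding error on this value is several orders of magnitude larger. The same logic continues down to FP8 and FP4.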

Trainium2 and later chips also implement 4:1 structured sparsity (i.e., keeping only 4 out of every 16 weights, or a similar pattern, and skipping the rest) to exploit model sparsity for additional speedups. According to analysis by The Register, Trainium3’s hardware can leverage 16:4 sparsity to quadruple effective throughput on supported workloads. This means a single Trainium3 chip, which delivers about 2.5 petaFLOPS of dense FP8 performance, can reach roughly 10 petaFLOPS of effective throughput on sparse models. For higher-precision tasks (such as BF16 training), Trainium still offers competitive performance while focusing on FP8/FP16 for maximum speed where acceptable.
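
The sparsity arithmetic can be sketched in a few lines of Python. The sketch assumes that 16:4 means at most 4 non-zero weights in every group of 16, which is the reading consistent with the 4× figure. The function names are hypothetical, and picking the largest-magnitude weights is just one simple pruning rule, not necessarily what AWS’s tooling does.

```python
def prune_group(weights, keep):
    """Zero all but the `keep` largest-magnitude weights in one group."""
    ranked = sorted(range(len(weights)), key=lambda i: abs(weights[i]), reverse=True)
    kept = set(ranked[:keep])
    return [w if i in kept else 0.0 for i, w in enumerate(weights)]


def prune_structured(weights, group=16, keep=4):
    """Apply the same keep-N-of-M rule to every consecutive group of weights."""
    pruned = []
    for start in range(0, len(weights), group):
        pruned.extend(prune_group(weights[start:start + group], keep))
    return pruned


# 64 deterministic, non-zero example weights (illustrative values only).
dense = [((i * 37) % 64 - 31.5) / 32 for i in range(64)]
sparse = prune_structured(dense)

nonzero = sum(1 for w in sparse if w != 0.0)
speedup = len(dense) / nonzero           # multiplications the hardware can skip
dense_pflops = 2.5                       # per-chip dense FP8 figure from the text
print(f"{nonzero} of {len(dense)} weights kept, ideal speedup {speedup:.0f}x")
print(f"effective throughput: {dense_pflops * speedup:.1f} PFLOPS")
```

The 4× gain is an upper bound. It only materializes when the model tolerates having three quarters of its weights zeroed in this fixed pattern.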

Memory and Interconnect:

Each Trainium generation has pushed memory limits to handle ever-larger models. Trainium2 used HBM3 built from 16 GB stacks (~96 GB per chip in total), whereas Trainium3 uses faster HBM3E with 12-high stacks, giving 144 GB per chip at 4.9 TB/s bandwidth. This 50% increase in memory capacity (and a ~70% increase in bandwidth) enables Trainium3 to feed its compute units efficiently for training massive models. AWS also engineered a proprietary high-speed interconnect called NeuronLink (chip-to-chip links) and a switching fabric (NeuronSwitch) for scaling out. On the networking side, AWS has also left the door open to other options, as it wants to optimize for maximum efficiency and vendor flexibility even at the networking layer.

Trainium2-based systems used a 3D torus topology. Trainium3 introduces an all-to-all switched fabric with NeuronSwitch-v1, which roughly doubles intra-node bandwidth and reduces latency between chips. Thanks to this fabric, a single Trn3 UltraServer can unite 144 Trainium3 chips into a single coherent system, and AWS’s UltraCluster 3.0 can further connect “up to 1 million Trainium chips” across multiple racks to scale the cluster. In testing, AWS reported that these improvements enable 4× faster model training and reduced inference latency when comparing Trainium3 UltraServers to the previous generation.

The software optimization layer

As most of you know by now, any software optimization layer not called CUDA has its hurdles. AWS has tried to mitigate this by integrating its Neuron SDK with popular ML frameworks (TensorFlow, PyTorch, JAX, Hugging Face libraries, etc.) to ease porting. Given recent moves, AWS is increasingly leaning into opening up the software ecosystem to the open-source community and accelerating adoption. Anthropic is key to maturing the Neuron software stack for broader external adoption. A high-ranking Amazon employee made an interesting comment regarding the strategy here:

»To answer your question, for us in five years, we hope on inference size we can at least address more than 50% of the pure play external customers. That’s the reason we are trying so hard to attract those leading companies or investing leading companies like Anthropic to work on accelerator because they are invest by Google and us. They are training their model on both TPUs and Trainium.

I think, basically, they are the trailblazer for all other external customers. Once they test out everything, they develop all the SDKs, those things then future other customer adoption will come in the next five years. This, I think, our conviction. I think this will be a great success going forward. This is what we think.«

source: AlphaSense

So Amazon is betting heavily on Anthropic and its engineers, who have become highly proficient in optimizing Trainium, to help build the software library base for broader adoption of Trainium chips. Combining Anthropic’s involvement with an embrace of the open-source ecosystem seems like a clever approach.

Given how entrenched CUDA is in engineers’ mindshare, fully embracing the open-source community seems to be the only viable strategy on the software side.

Performance of Trainium

A single Trainium3 chip provides ~2.5 PFLOPS of dense FP8 compute (10 PFLOPS sparse) and 144 GB of memory. Hence, a fully populated 144-chip UltraServer delivers on the order of 360 PFLOPS (dense FP8) or more than 1.4 exaFLOPS (with sparsity) of compute and over 700 TB/s of aggregate memory bandwidth. This puts the Trainium3 UltraServer in the same class as the largest GPU-based systems.
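
The UltraServer totals above follow directly from the per-chip figures quoted in this article. Here is a short Python sketch of that arithmetic; the constant and function names are mine, and the results are peak theoretical sums rather than measured performance.

```python
# Per-chip Trainium3 figures as quoted in this article.
CHIPS_PER_ULTRASERVER = 144
DENSE_FP8_PFLOPS = 2.5
SPARSE_FP8_PFLOPS = 10.0
HBM_GB = 144
HBM_BANDWIDTH_TBPS = 4.9


def ultraserver_totals(chips=CHIPS_PER_ULTRASERVER):
    """Multiply per-chip peak figures by the number of chips in the system."""
    return {
        "dense_fp8_pflops": chips * DENSE_FP8_PFLOPS,
        "sparse_fp8_eflops": chips * SPARSE_FP8_PFLOPS / 1000,
        "hbm_tb": chips * HBM_GB / 1000,
        "hbm_bandwidth_tbps": chips * HBM_BANDWIDTH_TBPS,
    }


for name, value in ultraserver_totals().items():
    print(f"{name}: {value:,.2f}")
```

This gives 360 PFLOPS dense, 1.44 exaFLOPS sparse, about 706 TB/s of aggregate memory bandwidth, and roughly 20.7 TB of HBM per UltraServer.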

According to AWS, early customers have reported substantial performance and cost benefits. For instance, Amazon’s Bedrock service (which offers foundation models) is already running production workloads on Trainium3, and others, such as Anthropic, have achieved 50% cost reductions and multi-fold throughput gains by switching from GPUs to Trainium hardware. This claim is from AWS, so take it with a grain of salt, as we don’t know which specific workloads these numbers were measured on.

The real number we are looking for is the total cost of ownership (TCO) per performance.

Before we get to Trainium3 (Trn3), here is some interesting information I found on Trainium2:

An Amazon employee in May mentioned the following:

» We offer as a price per FLOPS. It’s probably 30%-40% cheaper equivalent to their leading NVIDIA instance in our data center. The Trainium2 is about 30% cheaper than upper H200. We sell those. We incentivize customers to use it. Our cost perspective, because NVIDIA enjoys such a hefty margin, we all know it.«

source: AlphaSense

Customers report similar figures.

A customer in February noted that cost-conscious startups using TPUs or Trainium can reduce costs to 1/5 of those of NVIDIA clusters if longer, less time-critical training runs are allowed.

In April, an executive provided granular hourly pricing data, reporting that while NVIDIA H100 chips cost approximately $3 per hour per chip (via providers like CoreWeave), Trainium chips were available for roughly $1 per hour. They further noted that AWS offered potential discounts for long-term contracts that could bring the effective price down to $0.50 per hour, representing roughly 1/6 to 1/7 of the cost of an H100.
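
As a quick sanity check on those hourly figures, the Python sketch below computes the price ratios implied by the quoted numbers. It compares raw per-chip-hour prices only and does not adjust for per-chip performance; the function names are hypothetical.

```python
# Hourly per-chip prices as quoted by the executive (USD).
H100_USD_PER_HOUR = 3.00
TRAINIUM_LIST_USD_PER_HOUR = 1.00
TRAINIUM_DISCOUNTED_USD_PER_HOUR = 0.50


def price_ratio(accelerator_price, reference_price=H100_USD_PER_HOUR):
    """Accelerator price as a fraction of the reference (H100) price."""
    return accelerator_price / reference_price


def price_for_fraction(fraction, reference_price=H100_USD_PER_HOUR):
    """Hourly price that corresponds to a given fraction of the reference price."""
    return reference_price * fraction


print(f"list price:       {price_ratio(TRAINIUM_LIST_USD_PER_HOUR):.3f} of H100")
print(f"discounted price: {price_ratio(TRAINIUM_DISCOUNTED_USD_PER_HOUR):.3f} of H100")
print(f"1/7 of H100 would be ${price_for_fraction(1 / 7):.2f} per hour")
```

At $0.50 per hour, the ratio is exactly 1/6. The 1/7 end of the quoted range would imply a price of roughly $0.43 per hour.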

Another customer mentioned in August that Amazon is offering »massive discounts« on Trainium processors even within their own cloud instances to undercut NVIDIA GPU spot pricing.

A director at Tenstorrent also highlighted the utilization benefits of ASICs, noting that GPU utilization for training often sits at only 30-40% due to data movement bottlenecks. In contrast, AI accelerators (ASICs) like Trainium can achieve near 100% utilization because they are explicitly architected for these workloads.

Most experts consistently cite a 30-50% cost advantage for Trainium over comparable NVIDIA instances, driven by lower unit costs and aggressive pricing strategies.

Now, moving to the performance of Trainium3: at FP8 precision, a Trn3 UltraServer is roughly on par with Nvidia’s latest 72-GPU “Blackwell Ultra” system in total throughput. However, at ultra-low precision FP4 for inference, Nvidia’s system still leads by ~3×.

SemiAnalysis also ran the numbers on the TCO/performance of Trn3.

They found that the TCO per marketed performance of Trainium3 is 30% better than that of the GB300 NVL72 at FP8, but much worse at FP4.

What does this mean? To put it in perspective, FP4 is currently harder to use for training workloads because low precision can cause the model to “diverge” (basically, the AI becomes confused during learning).

However, NVIDIA has recently proven with NVFP4 that you can train with 4-bit precision by using clever scaling. This could potentially reduce training costs by an additional 30–40% over FP8. At least for the next few months, it isn’t expected that the big AI labs will switch to FP4 for training.

In inference, the story is slightly different, as AI labs are aggressively adopting FP4. FP4 enables massive models (such as a 1-trillion-parameter MoE) to fit within the memory of fewer chips. If a model that used to require 16 chips now fits on 8 chips thanks to FP4, your hardware cost per token drops by roughly half. It is no surprise that Amazon has already announced that Trainium4 should deliver 6x the FP4 performance of Trainium3.
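
To see why lower precision translates into fewer chips, here is a rough Python sketch that estimates how many chips are needed just to hold a model’s weights at different precisions. It assumes Trainium3’s 144 GB of memory per chip and counts weights only, ignoring the KV cache, activations, and any replication, so real deployments need more. The function names are hypothetical.

```python
import math

HBM_GB_PER_CHIP = 144  # Trainium3 memory per chip, as quoted in this article


def weight_memory_gb(num_params, bits_per_param):
    """Memory needed for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def chips_needed(num_params, bits_per_param, hbm_gb_per_chip=HBM_GB_PER_CHIP):
    """Minimum number of chips whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(num_params, bits_per_param) / hbm_gb_per_chip)


params = 1_000_000_000_000  # a 1-trillion-parameter model
for name, bits in [("BF16/FP16", 16), ("FP8", 8), ("FP4", 4)]:
    gb = weight_memory_gb(params, bits)
    print(f"{name}: {gb:,.0f} GB of weights, at least {chips_needed(params, bits)} chips")
```

Under these assumptions, the weights shrink from 2,000 GB at 16-bit to 1,000 GB at FP8 and 500 GB at FP4, and the minimum chip count falls from 14 to 7 to 4. Each halving of precision roughly halves the hardware needed to hold the model.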

This data suggests that Trainium3 can be a very good alternative to Nvidia for training workloads if you know how to use the Trainium software stack.

Trainium3’s operating cost also matters in this calculation: Trn3 runs at ~1,000W per chip, while Nvidia’s GB300 runs at ~1,400W, so it’s not just about the upfront CapEx.

Why is Trainium this cheap?

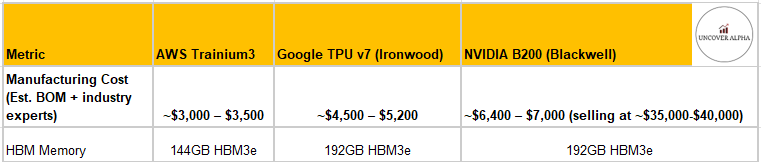

This is a calculation I derived from multiple sources, including BOM and industry expert interviews on the manufacturing costs of Trainium3, TPUv7, and Nvidia B200:

Looking purely at manufacturing cost, a Trainium3 chip is half the price of an Nvidia B200. But once you look at the price that Amazon would actually have to pay, which includes the Nvidia margin ($35k-$40k), the difference is staggering, and the reason for Trainium’s TCO/performance advantage starts to make sense. While Amazon also applies a margin to those costs for external clients, it is nowhere close to Nvidia’s margin. These cost estimates are broadly consistent with an Amazon executive’s comment back in May:

»Typically, in our internal chip, without considering all these R&D investments, because that’s going to be spread over chip, just from a manufacturing cost perspective, our Trainium chips are typically 1/3 cheaper than NVIDIA. Of course, [half of the] NVIDIA’s price is margined. Just from an acquisition of price perspective, we are around 1/3 of the cost when we buy NVIDIA chip, similar generation.«

source: AlphaSense

Amazon’s significant cost advantage is also a result of how it optimizes chips and manages its supply chain. As an employee of Alchip Technologies (an Amazon supplier) explained:

»It’s become so important that I think at this point, Annapurna has a lot of their own design team. They feel they can pretty much do not use a design partner for front-end design, and they can license a high-speed SerDes IP from a third party like Synopsys, Cadence and achieve lower cost. That’s why I think now in the third generation onward, they’re more focusing on the cost instead of the other aspect«

source: AlphaSense

The biggest thing holding Trainium back

The most significant factor holding back Amazon’s Trainium is…