Generative AI - which industries and what companies stand to benefit

Welcome to the UncoverAlpha newsletter. The newsletter focuses primarily on deep dives and insights into great companies in the tech and growth sector. The newsletter is free, so if you haven't already, you can subscribe at the following link.

This article is sponsored by Stream.

I use their platform regularly, and it is one of my main tools for analyzing companies from a qualitative standpoint. The insights shared by ex-employees, big customers, and competitors are very valuable for understanding industry trends and where a company is heading. You will see many of them in this article as well.

Stream by AlphaSense helps investment research teams access unique, high-quality expert insights faster and more cost-effectively than most traditional expert networks.

With Stream, you’ll have instant access to:

20,000+ on-demand transcripts of interviews between experienced buy-side analysts and company experts, providing first-hand perspectives on the companies and sectors that matter to you.

The fastest-growing library in the industry, you're bound to find what you need, when you need it!

Insight from experts with 500+ hours of industry interview experience.

If you want to try the platform out, you can use the link below

If you are interested in sponsoring the next article, you can reach me via DM on Twitter @RihardJarc.

Hey everyone,

Before we delve into the article, I want to express something I haven't felt in a long time during the process of exploring this topic and crafting this article. It's that profound sense where you recognize something truly groundbreaking that will have significant ramifications for industries, everyday lives, and society as a whole. I genuinely believe we are on the verge of a historic moment in AI development and usability, and it is imperative for every investor to grasp the implications this holds for jobs and various industries.

Now, let's embark on our journey through this article. Here, I will present my findings on the industries and companies that are poised to reap the greatest benefits from the emerging wave of generative AI. Furthermore, I will highlight potential avenues for investors seeking exposure through investments in public companies.

A short briefing on AI

AI in general has been developing for a long time, and many of the applications and services we use daily rely on AI systems in the backend. However, the real pivotal moment for the industry came at the end of November last year, when OpenAI made waves by launching a free preview of ChatGPT, a new chatbot powered by GPT-3.5. It was one of those rare instances where technological advances aligned with a fun and highly adoptable product. This marked the moment Large Language Models (LLMs) entered the mainstream.

This release was the first widely exposed LLM chatbot, offering a glimpse of what generative AI can do through a user-friendly interface. In just five days, the preview garnered over a million signups, putting it on course to become the fastest-growing consumer application in history. Within two months of its launch, it was estimated to have reached 100 million monthly active users.

Now, it's important to note that this article does not aim to delve into the complete history of AI and LLMs. What is crucial to understand is that the foundation for all these LLMs was laid in 2017, when Google introduced the transformer model in its research paper titled "Attention Is All You Need."

The transformer model was specifically designed to overcome the limitations of earlier recurrent neural network (RNN) models for sequence processing. RNN models were computationally expensive, since they process a sequence one step at a time, and struggled to capture long-term dependencies. In contrast, the transformer model uses a self-attention mechanism to assess how relevant different segments of a sequence are to one another, combining them to generate context-aware representations. These representations are used in various downstream Natural Language Processing (NLP) tasks. To this day, transformer models serve as the foundation of generative AI. Generative AI refers to AI systems that produce new, original output rather than retrieving pre-existing content or filling in templates. For example, OpenAI uses transformer models for ChatGPT, and Google uses them for Bard and many other products in its portfolio.
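To make the self-attention idea more concrete, here is a minimal sketch of single-head scaled dot-product attention in plain Python. It is a toy under stated assumptions: the token vectors are made up, and it skips the learned query/key/value projection matrices, multiple heads, and positional information that real transformers use. Each output vector is a weighted mix of all input vectors, with the weights set by how relevant each position is to the current one.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x):
    """Single-head scaled dot-product self-attention over a list of vectors.

    Toy simplification: the input vectors serve directly as queries, keys,
    and values (no learned projection matrices).
    """
    d = len(x[0])
    outputs = []
    for q in x:
        # Relevance of every position in the sequence to the current position.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        weights = softmax(scores)
        # Context-aware representation: weighted mix of all value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, x)) for j in range(d)])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # made-up 2-dimensional "embeddings"
for row in self_attention(tokens):
    print([round(value, 3) for value in row])
```

Because the first two toy tokens are mirror images of each other, their outputs come out mirrored too, while every output row still blends information from the whole sequence.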

What makes this a historic moment for AI is the fact that we now have a stand-alone AI application that has achieved mainstream recognition. Moreover, from a philosophical standpoint, when engaging with chatbots like Bard or ChatGPT, there are instances where they provide answers that resemble hallucinations. The "computer" goes beyond presenting factual responses and actually generates fictional content. This domain was previously exclusive to humans and often characterized as creative thinking.

The possible effect of this technology on businesses

Let's focus on the positive effects of Generative AI on businesses. We can categorize these effects into a few key areas, although it's important to note that since the technology is still new, these may be early steps:

Increased productivity.

Faster data transmission and synthesis.

More personalized user experience.

Increased idea generation and creativity.

Reduced language barriers.

Other use cases, such as faster fraud detection.

What unites all these points is productivity. With the right implementation of Generative AI, nearly every company can benefit. Initially, the impact is more pronounced in digital professions and businesses.

We can already observe this in reported earnings. For example, during Airbnb's last earnings call, CEO Brian Chesky noted that their developers are 30% more productive using products like GitHub Copilot, an AI pair programmer developed by GitHub and powered by OpenAI's models.

Furthermore, the impact of Generative AI is significant in customer service, particularly in call centers. Many companies have already announced plans to integrate well-known Large Language Models (LLMs) with their own product and service data to create "AI assistants." These assistants have the potential to perform at least as well as, if not better than, human agents in call centers.

Generative AI goes beyond text generation and is also useful for creating images and even videos, disrupting the fields of design and marketing.

Additionally, the automation of repetitive administrative tasks has accelerated with the introduction of LLMs, significantly increasing efficiency.

Software companies specializing in CRM, ERP, accounting, and other areas are already incorporating public LLM capabilities into their products, leveraging their proprietary data to create powerful tools for specific use cases. In the coming months, we can expect a surge of new products in these domains.

In general, well-trained employees can access the information they need more quickly and develop better solutions to problems using Generative AI.

However, there's a catch. Although the capabilities of these LLMs are robust, they have not yet been fully harnessed. The interfaces and products built on these powerful models are not yet plug-and-play for many workers. It takes skill and knowledge to structure prompts effectively and to ask the right questions or give the right commands to obtain the best answers.

While the focus of this article is not on the negative effects of this technology on society, it is important to acknowledge that, like any disruptive technology, it has negative implications for businesses as well. Some of the potential negative effects are:

Lower barriers to entry for new entrants in many industries.

Companies without a ready internal data infrastructure for AI models will lag behind.

Companies with limited first-party data will face a disadvantage.

Cybersecurity becomes an even greater concern.

Companies that resist embracing AI and resist change will struggle to survive.

Liability issues arise from AI systems producing incorrect answers that lead to harm or damage.

What stands out the most among these points is that with any significant disruption, change is inevitable, and the management of established companies must recognize and adapt to it. Failure to do so will result in competition from AI-embracing companies that produce superior products or services. We are entering an era where data truly holds immense value. Companies with extensive internal first-party data can leverage it to enhance their products, thereby strengthening their moats.

From a philosophical standpoint, as I recently read in an article titled "Why Conscious AI Is a Bad, Bad Idea" by Anil Seth, professor of cognitive and computational neuroscience, we must be aware, even from an investment perspective, of two possibilities:

a) AI may eventually evolve into Artificial General Intelligence (AGI) with genuine consciousness.

b) Humans may come to believe that the answers and solutions generated by AI systems (even if incorrect) are correct, influenced by our own beliefs.

As the professor put it well:

“By wrongly attributing humanlike consciousness to artificial systems, we’ll make unjustified assumptions about how they might behave. Our minds have not evolved to deal with situations like this. If we feel that a machine consciously cares about us, we might put more trust in it than we should. If we feel a machine truly believes what it says, we might be more inclined to take its views more seriously. If we expect an AI system to behave as a conscious human would—according to its apparent goals, desires, and beliefs—we may catastrophically fail to predict what it might do.”

Now from an investment and product standpoint, it is crucial to recognize that due to our human tendencies, there is a risk of unquestioningly accepting the output generated by AI systems as correct. This can lead to increased user stickiness for AI-powered products. However, it also raises concerns about the proliferation of misinformation, as humans may attribute human or god-like qualities to these systems and develop emotional connections with them. From a company standpoint, this poses potential liability issues regarding the output produced by these AI systems, emphasizing the importance of regulatory considerations.

From an investment perspective, who are the possible winners of this new age?

To address this question, I present the following diagram as a summary of my thought process:

1. Cloud computing

The cloud computing sector is one of the biggest beneficiaries of the new AI wave. AI models need a lot of computing power to run, and especially to train. This is particularly true for large AI models, while smaller models can run locally. LLMs also mean more consumption of cloud storage, as more data needs to be stored and structured in the cloud. Besides infrastructure services, all three big public cloud providers (AWS, Azure, and Google Cloud) already offer SaaS products that will become even more useful for building and using AI models, ranging from pre-trained models to services such as text and image recognition. So we can expect not only more spending on infrastructure services such as storage and compute, but also a big uptick in usage of the hyperscalers' more profitable SaaS products.

The current Head of AI/ML Strategy at Snowflake recently said in an interview that he estimates only about 5% of total cloud bills today are related to AI/ML services, and that this share should keep climbing fast.

This is further confirmed by a former Google Group Head responsible for AI and Cloud, who thinks we are on the verge of a market explosion in AI spending. He estimates that AI currently accounts for roughly 5%-10% of clients' cloud bills, and that AI spending should grow from about $10B today to $100B in a matter of a few years:

In terms of market sizing, I think that we are about or we are on the verge of having a market explosion. First of all, just because now with the advent of ChatGPT, AI has become commonplace as a household term where people are starting to see the power of what it can really do. I think what makes this problem difficult is that AI is going to be really pervasive across almost every product that you see that's being created today, both on the consumer side and the enterprise side.

If you look at the overall public cloud spend right now across the public cloud infrastructure spend, I believe it's somewhere between $200 and $250 billion per year. AI is certainly a small component of that, certainly under 10% or even under 5%. If you use that back of the envelope math just from the public cloud providers, then you're talking about something on the order of $10 billion a year. I think we could see year over year growth that vastly exceeds the growth of the public cloud space. You can easily get to $100 billion, for example, in just a matter of a few years.

Source: Stream
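The back-of-the-envelope math in the quote can be reproduced in a few lines. This sketch uses only the figures quoted above ($200B-$250B of public cloud infrastructure spend, a roughly 5% AI share, and a $10B to $100B trajectory); reading "a few years" as 3 to 5 years is my assumption.

```python
def ai_cloud_spend(total_spend_bn, ai_share):
    """AI slice of public cloud infrastructure spend, in billions of dollars."""
    return total_spend_bn * ai_share


def implied_cagr(start, end, years):
    """Compound annual growth rate needed to grow from start to end over the given years."""
    return (end / start) ** (1 / years) - 1


# Figures from the interview: $200B-$250B total spend, roughly 5% of it AI-related.
for total in (200, 250):
    print(f"${total}B cloud spend x 5% AI share = ${ai_cloud_spend(total, 0.05):.1f}B")

# Assumption: "a few years" read as 3 to 5 years.
for years in (3, 4, 5):
    rate = implied_cagr(10, 100, years)
    print(f"$10B -> $100B in {years} years needs ~{rate:.0%} growth per year")
```

Under these assumptions, reaching $100B would require AI cloud spend to more than double every year on the short end and grow by more than half each year on the long end, which is why the interviewee expects growth that "vastly exceeds" the cloud market overall.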

Another big benefit for the cloud space is that in a year or two, we will have many LLM apps built on top of foundation models, or even new foundation models. Looking at the most recent Y Combinator batch, almost half of the startups are working on some kind of AI product, and they are getting funding even in these macro conditions. You can expect these startups to be big spenders with the cloud hyperscalers, given their compute-heavy products, driving additional revenue growth for the hyperscalers.

Azure

In terms of investments, Microsoft has shown a strong commitment to AI, investing $1 billion in OpenAI in 2019 alongside other investors. It continued its support by participating in a 2021 funding round in which OpenAI sought $100 million. In January 2023, Microsoft made another investment in OpenAI worth $10B. It now has a 49% stake in the company, and under the arrangement it gets 75% of OpenAI's profits until it recoups its investment.

With its investments in OpenAI, Microsoft also secured Azure as OpenAI's exclusive cloud provider, which is probably the most important part of the deal for Microsoft. When all is said and done, Microsoft will likely recoup most of its investment through Azure revenue and profits, since it is estimated that every 30-word ChatGPT response currently costs the company about 1 cent in cloud spend. And that is only the cost of running the model; training new foundation models is an even bigger expense. Estimates suggest that OpenAI spends about $700k per day on cloud computing to run ChatGPT at its current scale.
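Putting the two estimates above side by side gives a rough sanity check. This sketch simply combines two independent third-party estimates (about 1 cent per ~30-word response and about $700k per day); neither number is confirmed by OpenAI or Microsoft, and real costs vary with response length and hardware.

```python
# Third-party estimates quoted in the article (not confirmed by OpenAI or Microsoft).
COST_PER_RESPONSE_USD = 0.01    # ~1 cent per ~30-word ChatGPT response
DAILY_RUN_COST_USD = 700_000    # ~$700k per day to run ChatGPT

# If the whole daily bill went to ~1-cent responses, how many would that be?
responses_per_day = DAILY_RUN_COST_USD / COST_PER_RESPONSE_USD
# Annualized running (inference) cost, excluding any training spend.
yearly_run_cost_usd = DAILY_RUN_COST_USD * 365

print(f"Implied responses per day: {responses_per_day:,.0f}")
print(f"Implied yearly running cost: ${yearly_run_cost_usd / 1e6:,.1f}M")
```

Taken at face value, the two estimates are consistent with roughly 70 million responses per day and a running bill of about a quarter of a billion dollars a year, before any training costs.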

We also have to acknowledge that Azure is one of the most dominant cloud providers among enterprises. As enterprises start to integrate AI solutions into their workflows and data, Azure is probably the cloud provider that will benefit most from established companies adopting AI.

AWS

AWS is still the biggest cloud provider out there, although its growth has recently slowed more than that of the other providers. There are several reasons for this, but the main one is that AWS dominates the startup space. Startups are great clients when the macro environment is favorable, as they are cloud-native companies and VC funding conditions are loose. But when the macro turns, as it did in 2022, they are the first customers to cut costs and optimize spending, more so than mature enterprises.

When it comes to AI, AWS is also seen as the weakest positioned of the three providers, because Microsoft has OpenAI with GPT, and Google has Bard, its PaLM models, and the rest of its AI product suite. But in April, Amazon announced Bedrock, its API service for foundation models and generative AI on AWS. While it might seem that AWS is "late to the party," that is not necessarily the case right now. With the steep increase in usage and the boom in these AI products, computing resources are scarce, so even with their earlier offerings, Microsoft and Google can't meet all of the current demand. At the same time, because Microsoft is closely tied to OpenAI and Google owns Bard, startups building similar LLMs might prefer AWS over a cloud provider that is, in essence, their competitor.

Something similar happened to AWS, but in reverse: retailers moving to the cloud avoided AWS because they were competing with Amazon in e-commerce.

GCP

While it is the smallest of the three, Google's cloud unit could benefit significantly from the AI wave. Before the LLM hype, Google was already known for many AI cloud products, such as the Google Vision API. Interestingly, a few years ago GCP was the cloud provider that wanted to sell a lot of AI solutions and was perceived by large enterprises as the AI cloud provider. But enterprises didn't take it seriously, because they wanted infrastructure services, not AI services. This is confirmed by a former Google Cloud head who worked there for seven years:

When T.K. (Thomas Kurian, the current head of Google Cloud) came in, initially, we pivoted from ML/AI and we started focusing more on infrastructure-related messaging going to market, because what we were hearing was that larger enterprises didn't take our sales team seriously.

They think of Google as "the cool young dudes who are going to turn up in jeans in my office," versus what these enterprises are used to. Hence, when we talked about flashy things like ML and AI, they were just not taking us seriously. We instead started talking about infrastructure and our networking capabilities, and started expanding data centers a lot more under him and so on. That messaging changed in the initial days.

Source: Stream

Google's management decided it needed to become more of a cloud infrastructure provider than the "cool guys who sell AI." Ironically, in recent months and in the years to come, this priority will probably shift back toward AI. GCP also has the most advanced in-house chip development of the three cloud providers, its TPUs (Tensor Processing Units), which are supposed to be more cost-effective for AI training than GPUs (more on that in the Semiconductors segment).

2. Semiconductors

It is also clear that semiconductor companies are big winners of this trend. We already discussed how AI models need a lot of computing power to train; specifically, they need GPUs. This means companies like Nvidia and AMD stand to benefit. We saw the first signs of a significant ramp-up in GPU orders when Nvidia reported its latest earnings results: its guidance implies a staggering 64% YoY revenue increase next quarter, driven by increased demand in its data center unit. This is a large number: in absolute dollar terms, it means about $4B more revenue than analysts had projected for the quarter.

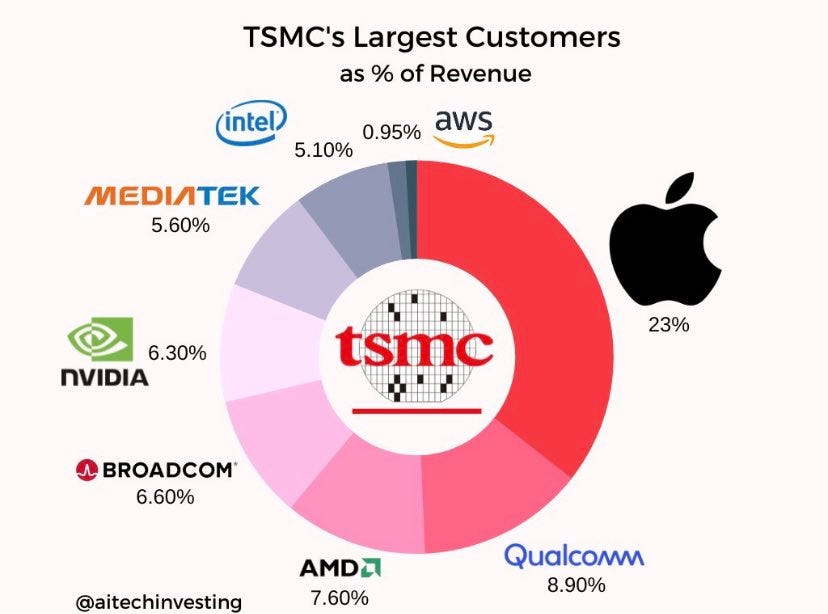

But the semiconductor space is more complex if we dive deeper into it. Foundries that manufacture the chips (Nvidia and AMD are only chip designers) are also big beneficiaries. Taiwan Semiconductor is the leading player in this space, especially in its share of the most sophisticated chip manufacturing. For example, the increased orders at Nvidia will translate back down the supply chain to Taiwan Semiconductor. Looking at its revenue by client, Nvidia represents only 6.3%, but together with AMD the share rises to 13.6%, and you can expect many new AI chip orders to come.

Equipment providers should also see a boost. ASML, which has a monopoly on the EUV lithography machines needed to produce high-performance chips, is one of them. There are also many others, like Applied Materials and Lam Research. These are less of a pure play, however, given their exposure to other parts of the semiconductor chain, such as memory chips.

But it's essential to look at where the puck is going in this industry, not only where it is today. The AI wave and the high prices of these GPUs have accelerated a trend that was already underway: big tech companies designing their own custom chips, skipping "middlemen" like Nvidia and AMD, and going directly to foundries with their designs.

Nearly every big tech company is already making its own chips: Apple, Google, Amazon, Meta, and even Microsoft.

As already mentioned, cost is one of the primary reasons, but making custom chips for your data centers brings other benefits too. Because the chips are purpose-built for your data centers, they can deliver better performance metrics: either more compute power or, as increasingly seems to be the focus, lower energy consumption and a better cost profile.

The mantra of the industry for the next five years will be bringing down the cost of running queries on AI models substantially so that business models become profitable. A former Product head at Google AI said in an interview that if you replaced Google Search as it is today with the type of search done through an LLM chatbot, the running costs would be 100x-200x higher than what Google pays today for a classic search query. The industry's big push is to make chips cost-effective enough to scale AI models while keeping business models profitable.

As Big Tech players skip designers like Nvidia and AMD, they are instead partnering with companies that license chip designs rather than sell finished chips. The market leader in this field is Arm Holdings. SoftBank owns Arm, but an IPO is very near. In 2020, Nvidia announced a deal to buy Arm for $40B, but regulators didn't approve it because of antitrust risk. In a way, Nvidia also saw what could become its risk and wanted to "hedge," but it wasn't successful (similar to what Adobe recently tried to do with Figma). Two already-public companies, Synopsys and Cadence Design Systems, fall into a similar category as Arm. However, they are smaller and have a smaller market share than Arm but still have exposure to this segment.

Another problem with relying exclusively on GPU providers is that custom chips might turn out to be a better alternative for AI training. Google developed the Tensor Processing Unit (TPU), which might be much better suited to AI than GPUs. As a former VP at Google Cloud explains:

“GPU, they call them SIMD processors. It's a single instruction. You work on a bunch of pieces of data in parallel. What they wind up doing is a lot of machine learning and AI is working on matrices. Typically, you're doing a lot of matrix multiplication.

Now, it turns out GPUs are really good for what you call vector operations because you feed the same multiply operation across a whole bunch of rows and columns to make it happen. Now the difference between a GPU and a TPU, which Google built and has gone on to, is the fact that the basic parameter that a TPU operates on is a matrix. It's not a vector. You could actually say, "Go multiply these two matrices. This is the size," and it will go do it for you, whereas for a GPU, you literally have to multiply the rows by the columns and give it the instructions to do the multiplications.

A GPU has a lot of places where you have to store the intermediate result. Therein lies the inherent advantage of a TPU over a GPU. There's less movement to memory and there are fewer operations overall. Inherently, what you're going to find is that from an architecture point of view, a TPU is better suited to multiplying matrices than a GPU, which only multiplies vectors, and a matrix is an array of vectors.

The performance advantage and the power advantage are definitely going to be on the side of TPUs. There's another side to it which is very, very important. Over time, I still expect the entire market to shift to TPUs. Why do I say that? There are two things. Maybe I should be circumspect in what I'm saying. If you take these large language models or pretty much any machine learning workload, the more data you dump at it, the better it is. That's why these guys are talking about 100 billion parameters. PaLM is like 500 billion parameters and that growth is like growing at 30%, 40% a year.

Now the silicon roadmap is growing at the pace it does. If you have a high growth rate in terms of demand, what is going to fulfill the demand in a better way is going to be a TPU. Now you flip around and ask yourself, "Okay. How many instances of this model that gets fed trillions of pieces of data do I need?" The answer becomes different, "Oh, you only need one or a few of them." You also ask yourself how many enterprises exist that have their own proprietary data that they're not willing to share with anybody else and they also want models and they also want to train it. You get a lot more.”

Source: Stream
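The interviewee's vector-versus-matrix distinction can be illustrated with a deliberately simplified model. In the sketch below, `matmul` computes a matrix product the way the quote describes for a GPU, as one row-by-column dot product per output cell, and `issued_ops` is a hypothetical counter comparing how many separate operations would be issued under the vector-style framing versus a single matrix-level instruction. It illustrates the argument only and is not a model of real GPU or TPU hardware: the total multiply-adds are the same either way, and the claimed advantage is fewer issued operations and less movement of intermediate results to memory.

```python
def matmul(a, b):
    """Matrix product computed as one row-by-column dot product per output cell
    (the 'vector-style' work the interviewee describes for GPUs)."""
    cols = list(zip(*b))  # columns of b
    return [[sum(x * y for x, y in zip(row, col)) for col in cols] for row in a]


def issued_ops(n, style):
    """Hypothetical count of operations issued for an n x n by n x n product.

    'vector': one dot-product instruction per output cell (n * n of them),
              each producing an intermediate result that is written back.
    'matrix': a single "multiply these two matrices" instruction.
    The underlying multiply-adds (n ** 3) are the same in both styles.
    """
    if style == "vector":
        return n * n
    if style == "matrix":
        return 1
    raise ValueError(f"unknown style: {style}")


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(matmul(a, b))  # [[19, 22], [43, 50]]

for n in (2, 128, 1024):
    print(f"n={n}: vector-style ops={issued_ops(n, 'vector')}, "
          f"matrix-style ops={issued_ops(n, 'matrix')}, multiply-adds={n ** 3}")
```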

However, Nvidia will still put up a fight with its GPUs and their progression. According to Nvidia, the training needs of transformer models will increase 275-fold every two years, compared to 8-fold for other models. Nvidia's recent H100 GPU includes a Transformer Engine that supports the FP8 format to speed up training of trillion-parameter models. This cuts the training time of transformer models from 5 days to only 19 hours.
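To put these figures on a per-year footing, the short sketch below converts the quoted two-year growth multiples into equivalent annual multiples and computes the speedup implied by going from 5 days to 19 hours. It only rearranges the numbers cited above; it does not verify them.

```python
def per_year_factor(factor, period_years):
    """Convert a growth multiple stated per multi-year period into a yearly multiple."""
    return factor ** (1 / period_years)


transformer_yearly = per_year_factor(275, 2)  # Nvidia: 275x every two years
other_yearly = per_year_factor(8, 2)          # Nvidia: 8x every two years for other models
speedup = (5 * 24) / 19                       # 5 days (120 hours) -> 19 hours

print(f"Transformer training needs: ~{transformer_yearly:.1f}x per year")
print(f"Other models: ~{other_yearly:.1f}x per year")
print(f"Training-time reduction: ~{speedup:.1f}x")
```

A 275-fold increase every two years works out to roughly 16.6x per year, versus about 2.8x per year for other models, and 5 days to 19 hours is about a 6.3x reduction in training time.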

3. Owners of LLM models

Owners of LLM models are another area where investors are looking for opportunities: companies such as Microsoft (which owns 49% of OpenAI, maker of GPT-4) and Google (which owns Bard and the PaLM model). After diving deep into the space, I find owning the owners of LLM models the least exciting way to benefit from the AI wave. That doesn't mean Microsoft or Google won't benefit; they are probably among the companies highest on the list to benefit, but in my view for different reasons. Their advantage lies more in the ability of LLMs to enhance their existing productivity products and cloud units, and in their vast data sets, than in owning LLM models like GPT-4 and Bard (built on the PaLM foundation model).

The costs of making your own foundation model are not small, though:

source: https://twitter.com/debarghya_das/status/1656475646053466112

Nonetheless, quite a few big tech companies and startups are going to build their own foundation models.

Even if we look at Microsoft, which now owns 49% of OpenAI (the company behind ChatGPT), it is hard to say that OpenAI will be the "profit center" of this new wave. Beyond the reasons already mentioned, OpenAI started as a non-profit and in 2019 transitioned to a "capped-profit" model, so investors in OpenAI can only earn a capped return on their investments.

Down the road, I see LLM models more as a commodity. Even Sam Altman, CEO of OpenAI, recently admitted that there will probably be more than a thousand LLM models out there. He also said recently that training GPT-4 cost more than $100M. So having the data is not enough by itself; you also need funding, though startups in this field are getting funded. A big open question is whether you need these large-parameter models to get the desired results, or whether smaller and less expensive ones are enough for some use cases. The industry is still developing in this regard. In my view, the real advantage will lie less with the exclusive owners of foundation models and more with companies that have their own data, build on top of a foundation model, and enhance it into their own custom model.

The companies that own LLM models also face growing competition from open-source LLMs. A significant event for the open-source community was the leak of Meta's LLaMA models.

This was recently described in a Substack post that published a leaked internal Google document titled "We Have No Moat, And Neither Does OpenAI." I would highlight the following section, but I advise anyone interested in the topic to read the document in full:

Ultimately, open-source foundation models might turn out to be better than privately owned ones. Even Sam Altman recently said that OpenAI might open-source its models at some point.

When comparing the capabilities of foundation models like GPT-4, Google PaLM, and Meta's LLaMA, one common measure is the number of parameters, the internal weights a model learns during training. The more parameters a foundation model has, the more complex and capable it is supposed to be, sort of like more parameters meaning more brain function. But the more parameters, the more costly the model is to train and run. For the most popular foundation models, the parameter counts look as follows:

Meta LLaMA (early release) – 65B parameters

GPT-3 – 175B parameters

Google PaLM (powers Bard) – 540B parameters

GPT-4 – estimated at around 1T parameters (OpenAI has not disclosed the figure)

Google Gemini (Unicorn) – supposed to be on par with GPT-4 or even close to GPT-5

But as we said before, bigger doesn’t mean better; sometimes bigger is too costly and less accessible.
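One concrete way to see why bigger models are more costly is the memory needed just to hold their weights. This is a rough sketch under stated assumptions: 2 bytes per parameter (16-bit precision), an 80 GB accelerator (the memory size of the 80 GB H100 variant), and the parameter counts listed above, with the GPT-4 figure being an unconfirmed estimate. It ignores activations and other working memory, and training needs considerably more memory per parameter for gradients and optimizer state, so real requirements are higher.

```python
import math

# Assumptions: 16-bit weights (2 bytes per parameter) and 80 GB of memory per accelerator.
BYTES_PER_PARAM = 2
ACCELERATOR_MEMORY_GB = 80

# Parameter counts as listed above; the GPT-4 figure is an unconfirmed estimate.
MODELS = {
    "Meta LLaMA": 65e9,
    "GPT-3": 175e9,
    "Google PaLM": 540e9,
    "GPT-4 (estimate)": 1e12,
}


def weight_memory_gb(params):
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM / 1e9


def accelerators_needed(params):
    """Minimum number of accelerators whose combined memory can hold the weights."""
    return math.ceil(weight_memory_gb(params) / ACCELERATOR_MEMORY_GB)


for name, params in MODELS.items():
    print(f"{name}: ~{weight_memory_gb(params):,.0f} GB of weights, "
          f"at least {accelerators_needed(params)} x 80 GB accelerators")
```

Even before any training or serving overhead, the largest models need their weights spread across many accelerators, which is one reason cost rises so steeply with parameter count.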

4. Companies with data

One of the more exciting investment fields is companies that own large proprietary data sets in their sub-industry and can now combine them with public LLMs to build enhanced versions of their products. This means not only improving existing products but also creating new ones in the process.

When it comes to this segment, quite a lot of companies fit the bill.

4.1 Productivity tools

The first and most obvious category that comes to mind is so-called productivity tools. Some of the biggest beneficiaries are Microsoft 365 and Google Workspace, as LLMs make it much easier to create, edit, and write with these tools. In this category, I would also add Adobe's tools. Adobe's Generative Fill feature already gives a glimpse of what I am talking about:
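A common way to combine proprietary data with a general-purpose LLM is to retrieve relevant internal documents and place them in the prompt, an approach often called retrieval-augmented generation. The sketch below is a toy version under stated assumptions: `DOCUMENTS` is a hypothetical in-house knowledge base, retrieval uses simple word overlap instead of the embedding search production systems typically use, and no actual LLM is called; the function only builds the prompt that would be sent to one.

```python
import re

# Hypothetical in-house knowledge base standing in for a company's proprietary data.
DOCUMENTS = [
    "Refunds are processed within 5 business days after the returned item arrives.",
    "Premium members get free shipping on all orders over $20.",
    "Our support line is open Monday to Friday, 9am to 6pm CET.",
]


def tokenize(text):
    """Lowercase word set used for the toy relevance score."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question, docs, k=1):
    """Rank documents by word overlap with the question (toy stand-in for embedding search)."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question, docs):
    """Combine retrieved company data with the user's question for a general-purpose LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(question, docs))
    return (
        "Answer using only the company information below.\n"
        f"Company information:\n{context}\n"
        f"Question: {question}\n"
    )


print(build_prompt("How long do refunds take?", DOCUMENTS))
```

The design point is that the foundation model stays generic, while the company's own data, which competitors don't have, supplies the answer-specific context.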

The result is a more effortless, faster, and enhanced user experience for everyone who uses these tools.

Another subsegment of productivity tools is ERP/CRM and other B2B system providers. One that came up during my research is the accounting software company Intuit. With its internal data, it can help clients with faster bookkeeping and tax filing (it owns TurboTax). On the one hand, this can enhance and improve its existing products; on the other, it offers a new revenue stream in automated tax filing, which many companies would gladly pay for.

In this segment, while generative AI enhances the "old" products, companies must watch out for new competition. If they don't add these features to their existing products fast enough, users will go hunting for other products that fill the need and save them time. The barriers to entry have fallen slightly with this significant tech shift.

4.2 Social Media and Entertainment Companies

Social media and entertainment platforms are also among the bigger categories in this segment. Social media companies have enormous amounts of data. Take Meta's Facebook, for example: there are Facebook groups for almost every category, from planning a wedding in a specific country to barbecuing to taking care of your garden. These groups already form segregated knowledge bases organized by interest and activity, which can be very useful when building an AI model for a specific topic or activity. LLMs can also power customer service on WhatsApp, acting as digital AI chat assistants for companies that do commerce there. Meta could build a service on top of this and charge these companies a few cents each time the AI assistant answers a question.

Another significant benefit for the social media company is leveraging that data to make custom person-fitted ads. A former researcher working at Meta explained it well a few days ago in an expert interview:

“It's not too hard to imagine in the future Meta, Google, etc., on their ad networks, they might be using generative AI to generate content that specifically targets a particular user or a group of users. I think the content generation, from a monetization perspective, I believe it will allow a much greater level of targeting. I think it's going to become very ubiquitous.

Yeah. I was leaning more that the actual content of the ad could be generated to target a person's interests, their geographic location, the age, etc. Being able to control those parameters, I think they'll be able to tweak ads to make them more persuasive. For example, if you have someone in the ad, imagine a video ad, they talk more like you, they look more like you, you can understand how that would make a more persuasive ad, and that could be done literally for anyone.”

Source: Stream

With these kinds of ads, we can expect much higher conversion rates and, with them, higher ad prices and even more ad dollars per user for these companies, as ad effectiveness goes way up. It also cuts out the middleman: the ad agencies that previously consulted on ad type, format, content, design, video production, etc. In effect, Meta and other social media companies can act as a personalized ad agency, at scale, for their business clients targeting users. With this extra boost in ad effectiveness, traditional media ads become even less relevant for advertisers, so AdTech takes a bigger share of the overall ad market.

A few days ago, on the Lex Fridman podcast, Mark Zuckerberg explained where Meta is looking to apply generative AI in its products (we have already mentioned many of them):

AI assistant in WhatsApp.

AI agents on Instagram for creators, so their fans/followers can communicate with their “AI self” 24/7.

A custom AI agent for each small business for customer support and commerce (companies don’t want one “God-like” AI that recommends their competitors’ products).

A social assistant for every user (he mentioned, for example, an assistant that reminds you of friends’ birthdays but also briefs you on what has happened in their lives so far).

Improved ads with generative AI: user-to-user customization, easier generation of ad content (from text, or turning photos into video), Meta taking care of visual creation and language translation for different countries, etc. (cutting out the middleman, the ad agencies).

Tools for easier editing of photos or videos on Meta’s platforms.

Internally, developers and other employees are much more productive with LLM tools.

Another new revenue stream that might emerge for social media companies is profile “verification.” Companies like Twitter and Meta have already released options to pay for a public badge as a verified user. Meta went a step further and asks people who want to be verified for their ID, so paying the monthly fee alone is not enough. You can imagine that content will explode with generative AI, and much of it won’t be human-made but AI-made. At some stage, people will want the option to filter social media for human-generated content only. At the end of the day, this means most users get verified with their ID and start paying a monthly fee on social media platforms, and verified users regain reach as people filter for “only human” content. With AI generating an abundance of content, competition among creators for user attention will become even more saturated.

When it comes to entertainment and content companies, the main benefit is faster and cheaper content production. From idea to execution, AI can drastically improve the process.

When watching a video or a movie, people won’t distinguish between what is AI-generated and what is not.

I think, in general, the “Companies with data” segment is one of the most interesting for investors looking for opportunities. The reason is that it is not obvious at first which companies fall into this category, so the opportunities are not yet fully priced into the stocks. In general, a company with large amounts of non-public data and a product or service with a strong distribution channel embedded in consumer or business behavior should be able to leverage this well and even strengthen its market position.

The future of Generative AI and LLMs

We are at the very beginning of what this technology is capable of. We have touched on many things already, and the future, as I see it, is companies “hooking onto” these big foundation models and developing custom AI models for their use cases with their internal data.

The other thing is that while foundation models will keep growing in parameter size, there is a real market for smaller models that are more cost-effective for some use cases. At its I/O event, Google announced its PaLM 2 model in four different sizes, the smallest of which (Gecko) is light enough to run on mobile devices. That makes sense: if you can solve your problem with a smaller model, why not run it locally instead of accessing a big model, which in some cases is much more costly and less convenient to use?

The industry will also move quickly to make AI models less compute-heavy and much cheaper to train, through both hardware innovation and software improvements, especially in the training process.

There is also a topic I haven’t touched on today: Artificial General Intelligence (AGI). The idea, which is growing in popularity in this field, is that at some stage these AI models will reach human-like intelligence and become an intelligence of their own. I don’t want to go deeply into this here, as I think the topic deserves an article of its own, but opinions are divided. A former Google engineer claimed that one of these AI models had become sentient; others think this is ridiculous. We are not at that point yet, both in terms of the size of the AI models and because it is not clear this breakthrough alone is enough to reach AGI. I do think, though, there is a real possibility that at some point we achieve AGI. What that brings is anybody’s guess. The fact is that the genie is out of the bottle and the technology is now out there. Stopping development would be foolish, as we are not the only ones in the world capable of developing it.

Diving deep into this topic through research and tons of expert interviews, I haven’t found a single person who would say that generative AI is only hype and that there are no real use cases for this technology. On the contrary, most industry insiders say it is orders of magnitude bigger than what most people see. Even Google co-founder Sergey Brin came out of retirement to work on this technology at the company, saying it is the most exciting technology he has worked on in his life. This feels so different from crypto, NFTs, the metaverse, etc. I am not saying those things are not real or have no use cases. But I am saying that generative AI and these foundation models are far more impactful: they will change almost every industry and affect consumers’ daily lives as the internet did.

As an investor, it is important to acknowledge this trend, look at your portfolio companies and how it will affect them, and at the same time look for opportunities in new companies. There are two fundamental questions to ask here: does the company have a lot of non-public internal data, and is its management agile and open to change, or resistant to it? As the Greek philosopher Heraclitus once said: “the only constant in life is change.”

Disclaimer:

Of the stocks mentioned in this article, I own GOOG, META, AMZN, ABNB, and TSM.

Nothing contained in this website and newsletter should be understood as investment or financial advice. All investment strategies and investments involve the risk of loss. Past performance does not guarantee future results. Everything written and expressed in this newsletter is only the writer's opinion and should not be considered investment advice. Before investing in anything, know your risk profile and if needed, consult a professional. Nothing on this site should ever be considered advice, research, or an invitation to buy or sell any securities.