July monthly alternative data report: NVDA, AMD, ASICs, AWS, GCP, Azure

Hey everyone,

Posting a monthly alternative data insights report. In this report, I cover two fields:

- The first one is the Nvidia, ASICs, and AMD battle, where I found numerous valuable insights that have shaped some of my future thinking.

- The second section of this report provides insights into the cloud positions of Amazon, Microsoft, and Google before their earnings announcements.

Let's start with the AI infrastructure Nvidia topic.

What is clear from the last few weeks is that AI infrastructure spending is continuing to ramp up at a rapid pace. Meta's CEO, Mark Zuckerberg, talked about investing »hundreds of billions« into AI compute and having the most compute per researcher of any company. OpenAI's Sam Altman then posted on X that by the end of the year, OpenAI will have a million GPUs running, and that the next step for its team is to figure out how to 100x that. OpenAI also announced an expanded partnership with Oracle for its Stargate data center, where it will have 2 million GPUs. Just yesterday, Elon Musk, who runs xAI, posted about xAI's goal of having 50 million H100-equivalent units of AI compute within five years. For comparison, GPT-4 was trained on 25,000 Nvidia A100 chips, roughly equivalent to 12,500 H100s, so the 50M mark shows the scale the industry is now aiming for.
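To put that last comparison into numbers, here is a quick back-of-the-envelope sketch. It uses only the figures quoted above; the 2:1 A100-to-H100 ratio is simply the one implied by "25,000 A100 ≈ 12,500 H100", and the variable and function names are my own, not anything official.

```python
# Back-of-the-envelope scale comparison using only the figures quoted in the text.
# Assumption: 2 A100s ~ 1 H100, as implied by "25,000 A100 ~ 12,500 H100".
A100_PER_H100 = 2.0


def h100_equivalents(a100_count: float, a100_per_h100: float = A100_PER_H100) -> float:
    """Convert an A100 count into rough H100 equivalents."""
    return a100_count / a100_per_h100


gpt4_training_a100s = 25_000       # GPT-4 training cluster, per the text
xai_target_h100_eq = 50_000_000    # xAI's stated five-year goal, per the text

gpt4_h100_eq = h100_equivalents(gpt4_training_a100s)
scale_multiple = xai_target_h100_eq / gpt4_h100_eq

print(f"GPT-4 cluster in H100 equivalents: {gpt4_h100_eq:,.0f}")
print(f"xAI target vs. GPT-4 cluster: {scale_multiple:,.0f}x")
```

On these numbers, the xAI target is about 4,000 times the H100-equivalent compute of the GPT-4 training cluster. It is a rough comparison only, since it ignores differences in precision formats, networking, and how long the chips are actually used.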

Despite this topic being widely covered by many analysts, I have concluded that we are still underestimating the future compute needs of AI and, with it, the infrastructure spending. We are just starting to see the first uses of AI agents with OpenAI's new release, and the compute demands for these agents are significantly greater than those required for information retrieval (today's version of Search or even an LLM prompt).

With that in mind, the positioning of Nvidia versus AMD and custom ASICs developed by hyperscalers such as Amazon, Microsoft, Google, and Meta remains a crucial topic. The most interesting insights that I came across over the month are the following:

A high-ranking employee at Micron, speaking during an expert call, discussed GPU utilization rates. They have increased from the 40% range, where they were just 2-3 years ago, to now being at 70-80%. This is beneficial for both cloud providers and chip providers, as the ROI for cloud providers has improved, and according to him, the payback period for their investment has now improved to 6-9 months. With improved ROI, the cloud providers might feel more comfortable with future chip orders.
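To get a feel for how much utilization matters for payback, here is a deliberately simple sketch. The expert did not say what the payback period was at 40% utilization, so this is purely my own illustration: it assumes revenue scales linearly with utilization and that everything else (pricing, capex, operating costs) stays the same, which is certainly not true in practice. The function name is hypothetical.

```python
# Illustrative only: how payback would scale with utilization under a very
# simple model where revenue is proportional to utilized GPU hours and
# pricing, capex, and operating costs are held constant (my assumption).


def implied_payback_months(payback_now: float, util_now: float, util_then: float) -> float:
    """Payback period at util_then implied by payback_now observed at util_now."""
    if not (0 < util_now <= 1 and 0 < util_then <= 1):
        raise ValueError("utilization must be in the range (0, 1]")
    # Payback is inversely proportional to utilization in this model.
    return payback_now * util_now / util_then


# Figures from the expert call: 6-9 months payback at 70-80% utilization,
# versus utilization in the 40% range 2-3 years ago.
low = implied_payback_months(6, 0.70, 0.40)
high = implied_payback_months(9, 0.80, 0.40)

print(f"Implied payback at 40% utilization: {low:.1f} to {high:.1f} months")
```

Under these assumptions, the same hardware at 40% utilization would have needed roughly 10.5 to 18 months to pay back. That is not a number from the call, just a way to see why the jump in utilization makes cloud providers more comfortable with future orders.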

The consensus across the most valuable insights I read over the last month is that, while AMD can close the gap to Nvidia in terms of hardware, it still lags in two key areas. The first is networking/interconnect (picture it as the way GPUs communicate with each other to form one big GPU cluster). The second is software optimization, where AMD has ROCm rather than CUDA.

On the networking and interconnect side, there is some hope with AMD. As a high-ranking former Nvidia employee mentions, the adoption of UALink would help AMD with its scale-out problem. The fact that both Apple and Amazon have joined the UALink consortium is a positive sign for its adoption, despite Broadcom's departure from it. In general, the consensus is also that most big clients want to have more options than just Nvidia.

»There is a strong desire to develop alternatives to Nvidia. There isn't a customer in the world that would not leap at a viable alternative to Nvidia.«

High-ranking Dell employee (source AlphaSense)

For AMD, there is a chance on the inference workload side; however, most agree that it ultimately comes down to the stability of ROCm. In the past, I have already written about ROCm's problems, including its instability and the ROCm to CUDA converters not fully performing their intended function. A significant portion of the conversion still requires intervention by kernel engineers, which ultimately results in a more costly solution than simply purchasing Nvidia's stack. AMD has released an updated version of ROCm, and the first signals from customers look good:

»The word I'm getting now is that with the latest releases of ROCm, they are much more stable and they've worked through a lot of the bugs and stuff….«

High-ranking Dell employee

This was confirmed during a call with a former AI engineer at Nvidia, who mentioned that ROCm has become easier to work with compared to two years ago, but it still lags behind CUDA.

The consensus from these calls is also that ROCm, while it has become more stable, faces the biggest hurdle in the mindshare that CUDA has built over the years. The problem is that companies lack the confidence to build on ROCm because they are uncertain about the availability of skilled personnel with knowledge of ROCm. Nvidia's CUDA has been taught in universities for 15 years, so the entire talent pool and ecosystem have learned to build things on CUDA, which is a significant hurdle to overcome if you are a competitor to Nvidia. Even if you manage to do it, in the best-case scenario, it will probably take years.

The best thing AMD has going currently is that there is high motivation for an alternative, and big clients like the hyperscalers are willing to try things out, as they don't want to end up being dependent on one company having a monopoly on intelligence – Nvidia.

Nvidia's moves with its own cloud and now with Project Lepton are also angering hyperscalers, according to a Dell employee, as Nvidia might divert a significant amount of traffic from the Lepton project to those cloud clients that it prefers or in which it has an equity interest, such as CoreWeave. Nvidia knows that right now, the way it distributes GPUs is key, as companies are willing to work with most cloud providers as long as they have GPU capacity. Nvidia wants to commoditize the cloud industry and shift more of the power to itself.

Now turning to custom ASICs. Another interesting consensus from these interviews is that many of the experts, despite everything said above, still see ASICs taking a significant market share in the next 3-5 years:

»In 5 years GPU/ASIC in inference 50/50 split, in training 60/40«.

High-ranking Meta employee

A high-ranking Micron employee believes that by 2028, Nvidia's market share will be 50-60%, with the majority of the remaining pie going to hyperscaler custom ASICs.

The consensus is also that the most mature and capable ASIC out there right now is Google's TPUs, followed by Amazon's offering. Some experts even estimate that TPUs already power 30-50% of Google's inference needs.

In summary, based on the data and insights I have recently read, the consensus of industry experts is that AMD's ROCm has become more stable. AMD has started to move in the right direction, but this isn't stopping Nvidia, as its GPUs are still the most sought-after asset. In terms of ASICs, most expect them to take a significant market share in the future years, but this will take time, as apart from Google, many of the custom ASIC development efforts are still in early maturity stages.

If there are no significant architectural changes to the way AI models are trained and served for inference, then ASICs have a better chance of rising in adoption. However, if we start to see major changes, the flexibility of GPUs will make them the only viable option again.

There was an interesting comment from a Meta employee confirming this thinking:

»The world up until now has not had a very good handle on what type of compute was needed for any AI/ML use-case. They were changing so fast that it takes too long to build new silicon….We're finally getting into a space where we all in the industry have a much better idea of what we need to run. While people freak out a little bit because models are constantly changing, we got a much better idea of what data movement looks like in different types of AI models, and that leans us towards wanting something different than a traditional GPU.«

The hyperscalers and the cloud market check

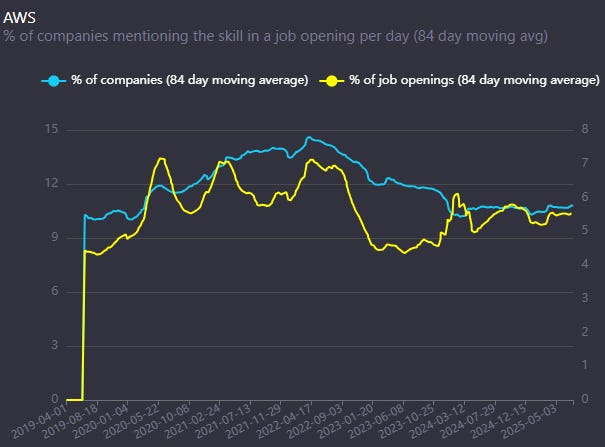

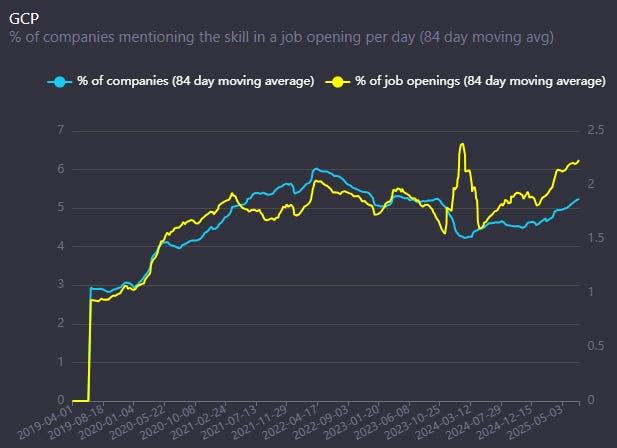

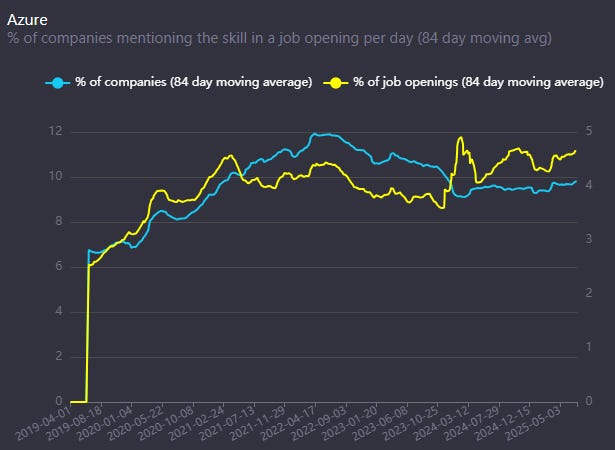

First, an interesting data point is that, upon examining job postings, there is an uptick in jobs and companies seeking engineers who are familiar with GCP. A slight uptick is also seen with Azure, but not with AWS.

Additionally, reviewing analysts' reports and channel checks, the data appears strong for the upcoming earnings reports of the hyperscalers. Here are some comments that recently stood out to me.

On Azure:

RBC Capital Markets:

»Azure core workloads remain stable, AI-related interest is building, and Copilot is starting to show up more frequently in renewal and expansion conversations. Most customers remain in pilot or department-level usage, with internal readiness still limiting broader adoption.«

»Azure demand remains steady; GPU constraints improved late in the quarter.«

»No signs of field-level OpenAI friction. Several noted that OpenAI integration is still a differentiator in enterprise bakeoffs, and Azure continues to win share in GenAI-heavy workloads.«

TD COWEN:

»Azure checks were strong, we're expecting capacity constraints to be easing, and our new bottoms-up model gives us confidence in Azure growth trending well above Street in the qtrs ahead.«

»Separately, 3rd party data we track points to 2Q growth for the Big 3 hyperscalers tracking above year-ago levels for the 2nd qtr in a row, alongside a return to Q/Q growth«

On AWS:

Bernstein:

»AWS is back in focus, and we expect to see top-line acceleration driven by improving performance in core and modest AI-related benefits as GPU supply constraints eased intraquarter.«

Morgan Stanley:

»Expect Larger Anthropic Growth Contribution Ahead: Notably, AWS's growth, excluding our estimated Anthropic inference revenue, has remained healthy (16%-19% over the past 5 quarters)...speaking to the durability of the business even through GPU-related supply constraints that are expected to ease in 2H.«

Truist Securities (on AWS):

»…and we believe an acceleration in Gen-AI related revenue could be on the horizon in the back half of the year«

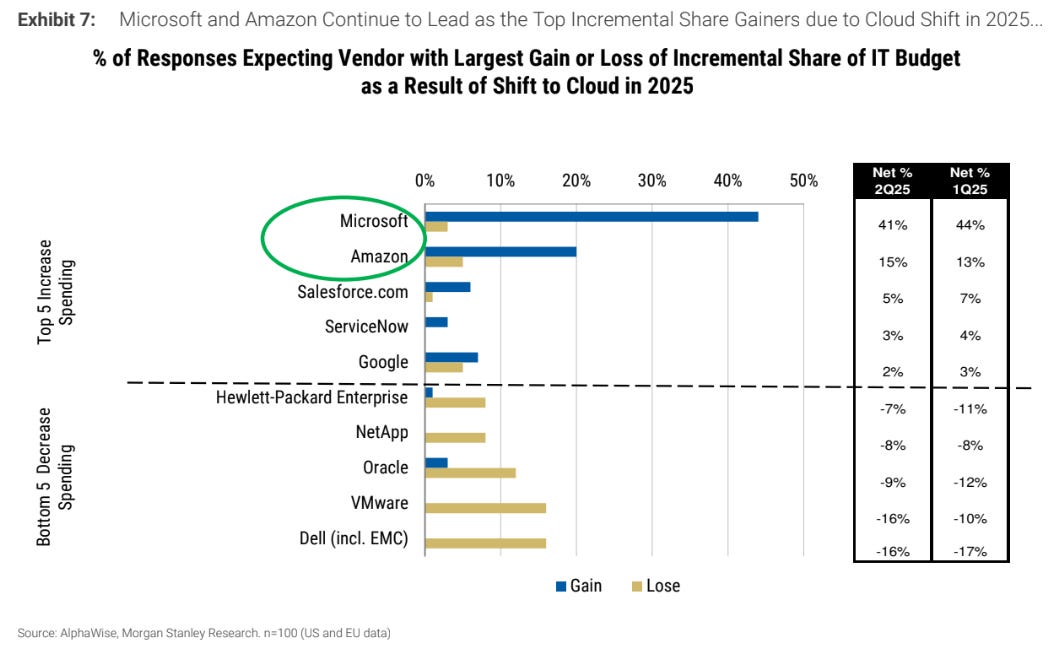

The trend I am observing is that, due to its OpenAI partnership, Microsoft is gaining more market share than its competitors. However, at the same time, the need for GPUs has accelerated the adoption of hybrid cloud environments.

I found an interesting comment from a former Amazon employee on this trend:

»What we saw in 2022 is the average start-up had 1.7, 1.8 cloud, if you looked at the number of cloud providers connected per start-up customer in our start-up customer base. That accelerated to 3.5 clouds, 3.4 clouds entering 2024.«

AlphaWise and Morgan Stanley Research data confirm this trend: Azure continues to dominate, although Amazon made some progress in the last quarter.

Another insightful piece was reading the JPMorgan CIO survey.

The key takeaway is that companies are migrating more towards cloud environments, as AI workloads require specialized compute, power, and cooling, which companies often don't want or can't handle on-premises.

I also saw in various data reports, including this one, that current GPU availability is sufficient, which might indicate that, except for large clients like OpenAI and the AI research labs, other companies have enough compute power to run their current AI workloads. This might also point to still limited usage of AI in real-world cases (outside of the start-up ecosystem).

»CIOs appear to primarily agree that the public cloud providers currently offer sufficient GPU availability to meet their organizations’ AI needs (no GPU shortage among enterprises), and that they will primarily buy AI Agents from SaaS providers, much more so than they plan to build their own custom AI Agents.«

The surprise to me was that there wasn't much talk about Google's GCP from the analysts. I think GCP might surprise to the upside, with the Gemini models being among the best-performing ones recently, and with Google's internal use of TPUs giving it the ability to offer more GPUs to its customers.

As always, I hope you found this article valuable. I would appreciate it if you could share it with people you know who might find it interesting. I also invite you to become a paid subscriber, as paid subscribers get additional articles covering both big tech companies in more detail, as well as mid-cap and small-cap companies that I find interesting.

Thank you!

Disclaimer:

I own Meta (META), Google (GOOGL), Amazon (AMZN), Microsoft (MSFT), Nvidia (NVDA), AMD (AMD), and TSMC (TSM) stock.

Nothing contained in this website and newsletter should be understood as investment or financial advice. All investment strategies and investments involve the risk of loss. Past performance does not guarantee future results. Everything written and expressed in this newsletter is only the writer's opinion and should not be considered investment advice. Before investing in anything, know your risk profile and if needed, consult a professional. Nothing on this site should ever be considered advice, research, or an invitation to buy or sell any securities.
