The hyperscaler - LLM provider relationships are starting to heat up

Hi all,

In this article, I will cover the relationships between LLM providers and cloud providers. What was once a relatively straightforward relationship is becoming more and more complex, with each party pursuing its own interests while those interests become increasingly entwined. It is clear that each side needs the other right now, but let's dive into how this relationship looks and how it might unfold.

The start

The first step came in 2019 when Microsoft invested $1B in OpenAI. As part of the deal, OpenAI agreed to use Microsoft's Azure as its exclusive cloud provider. The intention here was obvious: OpenAI needed compute to train its LLMs, and Microsoft got a new customer to use more of its cloud infrastructure services. The relationship later expanded, with Microsoft integrating GPT into its product portfolio, including its Azure AI offering, GitHub Copilot, Office, and other Microsoft products. Microsoft also invested an additional $10B in OpenAI (2023).

On the other side, we have Anthropic. They started their cloud partnership route in 2022 when Google invested $300M for a roughly 10% stake in the company. This partnership also included Anthropic choosing Google Cloud as its primary cloud provider. In early 2023, Google invested an additional $500M, and later in 2023 it announced plans to invest up to $2B in Anthropic over multiple years. However, Google was not the only one courting Anthropic in 2023: Amazon committed to invest $4B in Anthropic for a minority stake, initially investing $1.25B in September 2023 and an additional $2.75B in March 2024. The partnership also established AWS as Anthropic's primary cloud provider. Amazon integrated Anthropic's Claude models into Amazon Bedrock, which it then offers to its cloud customers. Anthropic also uses AWS's custom chips, such as Trainium and Inferentia, for training and inference operations. When it comes to Anthropic, it seems like Google was their first partner, but once Google focused on developing its own LLMs, AWS stepped in as a better partner.

The main reason LLM providers do these cloud deals is that they need an enormous amount of compute to train their AI models. On the other side, besides getting ownership in these companies, cloud providers also get exclusive cloud deals, which help their cloud business continue to show growth. An additional benefit for cloud providers is that they do not carry the significant losses from training and developing new models on their books. The only thing on their books is the investment, which so far is gaining in value as these AI companies raise new investment rounds at higher valuations, even though they still produce billions in losses.

However, the relationship has become more complex as cloud companies are also becoming more dependent on these companies delivering leading models so that they can resell them to their clients through their cloud AI services and marketplaces. On top of that, cloud providers are integrating these models into their products and services, such as GitHub, Microsoft Copilot, Amazon Q, etc.

At the same time, revenue from API usage of cloud clients has become an important revenue source for AI companies.

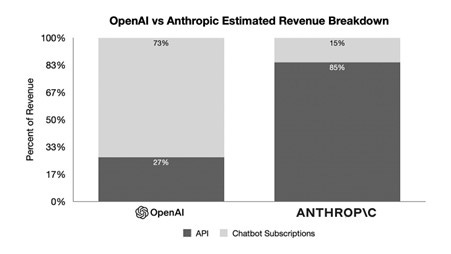

Looking at estimates on the revenue breakdown of OpenAI and Anthropic, the story is quite telling:

OpenAI makes around 73% of its revenue from chatbot subscriptions and 27% from API services. Anthropic makes 15% from its chatbot subscriptions and 85% from its API services. Similar estimates come from an interview on AlphaSense with an AI industry expert, who stated that 60-75% of Anthropic's revenue comes from third-party APIs (mainly AWS). OpenAI, on the other hand, had 72% of its revenue coming from ChatGPT.

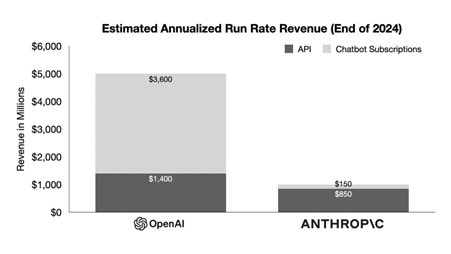

Also, looking at the estimates for revenue size, we can see a big difference: Anthropic is projected to have an ARR of around $1B by the end of 2024, and OpenAI is more in the $5B range.

Most of Anthropic's API revenue is believed to come from AWS's Bedrock service.

There are two potential problems with LLM providers being highly dependent on API revenue from cloud providers:

First, cloud providers have a revenue share agreement for this API revenue, which compresses the margin profile. Two months ago, The Information reported that Anthropic's gross margin dropped to 38% from the 50-55% range in December 2023. It shows that, as cloud companies take their share and as these models become more expensive to train, the gross profit margins of LLM providers are much smaller than what you would expect from traditional software or SaaS providers (70-90% range).

Anthropic's revenue share with its cloud providers is reported to be between 25% and 50%, while OpenAI's revenue share with Microsoft Azure is around 20%.
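To make the difference concrete, here is a rough back-of-the-envelope sketch in Python that combines the revenue mix estimates with the revenue share figures quoted above. It assumes, purely for illustration, that the revenue share applies to all API revenue and to none of the subscription revenue. That overstates the effect, since not all API usage runs through a cloud marketplace. The function name is my own and the inputs are estimates, not audited figures.

```python
def cloud_take_of_total(api_mix: float, revenue_share: float) -> float:
    """Fraction of total revenue passed on to the cloud partner.

    Assumption (illustrative only): the revenue share applies to all
    API revenue and to no subscription revenue.
    """
    return api_mix * revenue_share


# Estimates quoted in the article: API share of revenue and revenue share.
openai_take = cloud_take_of_total(api_mix=0.27, revenue_share=0.20)
anthropic_low = cloud_take_of_total(api_mix=0.85, revenue_share=0.25)
anthropic_high = cloud_take_of_total(api_mix=0.85, revenue_share=0.50)

print(f"OpenAI: {openai_take:.1%} of total revenue")
print(f"Anthropic: {anthropic_low:.1%} to {anthropic_high:.1%} of total revenue")
```

Under these assumptions, OpenAI would pass on roughly 5% of its total revenue, while Anthropic would pass on somewhere between a fifth and two fifths of its total revenue.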

The second potential problem is that because clients have a relationship with the cloud provider, which resells the LLM via the API, you as an LLM provider are more easily replaceable if another LLM alternative comes up. There is a reason why both Microsoft and Amazon still have a strong incentive to adopt and offer Meta's open-source Llama. Not to mention that both companies are also developing their own models internally.

As this AI expert put it:

» As soon as or if there ever is a better model, whether on price, performance, or quality, it's one line of code to switch, maybe slightly more than one line of code, but it's a fairly trivial switch. Unlike consumers, businesses are much more likely to do so on an ROI basis.«

source: AlphaSense (get a 14-day free trial and access the full interview)
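The "one line of code" point can be sketched as follows. This is a hypothetical illustration in Python, not any vendor's actual SDK: the two stub providers, `MODEL_REGISTRY`, `ACTIVE_MODEL`, and `complete` are all invented names. The idea is that when an application talks to models through a common interface, swapping providers becomes a configuration change.

```python
from typing import Callable, Dict


# Hypothetical stand-ins for two model providers behind one interface.
# Real SDKs differ; these stubs only tag and echo the prompt.
def provider_a(prompt: str) -> str:
    return f"[model-a] {prompt}"


def provider_b(prompt: str) -> str:
    return f"[model-b] {prompt}"


MODEL_REGISTRY: Dict[str, Callable[[str], str]] = {
    "model-a": provider_a,
    "model-b": provider_b,
}

# The "one line" a business would change when a better model appears.
ACTIVE_MODEL = "model-a"


def complete(prompt: str, model: str = ACTIVE_MODEL) -> str:
    """Route the prompt to whichever backend is configured."""
    return MODEL_REGISTRY[model](prompt)


print(complete("Summarize this contract."))
print(complete("Summarize this contract.", model="model-b"))
```

In practice, prompts and evaluations may need retuning for each model, which is probably the "slightly more than one line" the expert alludes to.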

However, there is a big difference between OpenAI and Anthropic. The main reason for that difference is ChatGPT's brand power.

Before we continue with the article, a word from our partner AlphaSense

In my research process, I use the AlphaSense platform as one of my primary research tools. I made a short video explaining some of the features they provide and how I use the platform.

The advantage that OpenAI has is the ChatGPT BRAND

Because the launch of ChatGPT was basically the moment when LLMs became mainstream, it also propelled the ChatGPT brand. It is also one of the reasons why OpenAI makes more than 70% of its revenue via subscriptions, mainly the ChatGPT subscription.

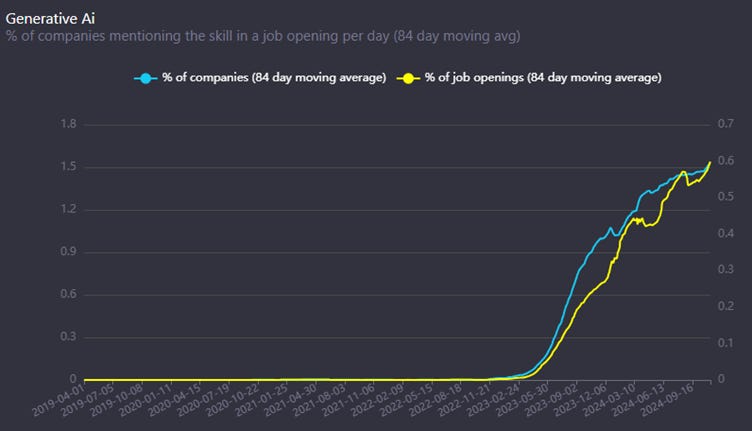

It is interesting to look at data showing job openings.

As expected, job openings mentioning GenAI as a needed skill are steeply rising, but it's worth looking at percentages more closely. We can see that the 84-day moving average in terms of the % of companies mentioning GenAI as a needed skill stands at 1.5% and the % of total job openings mentioning GenAI as a needed skill at 0.6%.

Now, let's look at job openings mentioning ChatGPT as a needed skill:

Looking at the numbers, the moving average for the % of companies mentioning ChatGPT as a needed skill is at 0.3%, and the % of total job openings mentioning ChatGPT as a needed skill is at 0.11%.

Comparing the two data sets, the brand power of ChatGPT is evident: requirements for employees to know ChatGPT account for around 20% of all GenAI requirements (by both companies and total job openings).
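The roughly 20% figure can be checked with a quick calculation. The short Python sketch below divides the ChatGPT percentages by the GenAI percentages quoted above; the variable and function names are my own.

```python
# Moving-average percentages quoted in the article.
genai = {"companies": 1.5, "job_openings": 0.6}
chatgpt = {"companies": 0.3, "job_openings": 0.11}


def share_of_genai(metric: str) -> float:
    """ChatGPT mentions as a fraction of GenAI mentions for one metric."""
    return chatgpt[metric] / genai[metric]


for metric in genai:
    print(f"{metric}: {share_of_genai(metric):.0%}")
```

By companies, the ratio is 20%, and by total job openings it is a little over 18%.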

ChatGPT has become synonymous with using an AI chatbot, in the same way Google became synonymous with search.

OpenAI knows it has the power

It seems that Altman and OpenAI know this »power« very well. There are more and more reports of Altman becoming frustrated with their Microsoft relationship, mainly because OpenAI is not getting enough compute infrastructure to continue growing.

In June, OpenAI announced that it had partnered with Oracle Cloud to increase capacity. It is more than clear to most of us who follow this space that this is not the scenario Microsoft would want. But for Microsoft, OpenAI partnering with Oracle was probably preferable to a partnership with AWS or GCP, which Microsoft sees as more significant threats in the cloud space.

You also can't get away from the feeling that OpenAI believes it is bigger, and its potential far greater, than just being a feature or solution in the Microsoft suite.

The Information reported that after OpenAI CEO Sam Altman and Chief Financial Officer Sarah Friar raised $6.6 billion in capital, mainly from a slew of financial firms, they told some employees that OpenAI would play a greater role in lining up data centers and AI chips to develop technology rather than relying solely on Microsoft.

There were also comments saying that Microsoft is not moving fast enough to supply OpenAI with enough computing power. The fact that competitors such as Anthropic, xAI, and Meta are getting closer is an essential factor in Altman wanting to have more partners that allow OpenAI to move fast and scale fast.

OpenAI's ambition to develop its own custom chips (ASICs) is another sign of power. At first, Altman seriously wanted to raise trillions to start a foundry business. Lately, it was reported that this goal was too ambitious and was downsized to »just« designing their own chips rather than manufacturing them.

It is a show of power because it shows that Altman's and OpenAI's ambition is through the roof and definitely not limited to the Microsoft environment. It also shows that the days when OpenAI relied exclusively on Microsoft are over. Microsoft itself is developing its own custom chips (ASICs) for AI, and the fact that OpenAI still felt the need to do it by itself suggests that it does not have confidence in its partner Microsoft delivering the custom ASICs and the needed infrastructure.

But what about Anthropic?

Given that a high percentage of Anthropic's revenue comes from API usage on cloud platforms (mostly AWS Bedrock), their negotiating power seems to be a bit smaller. Even though they have deals with both AWS and GCP, GCP is trying to push their Gemini models more, which is probably not something that Anthropic wants. There are reports that Anthropic is now looking at its next investment round and that AWS is considering a similar investment to the one before in the range of $4B, but this time, it comes with a slight twist. AWS is asking Anthropic to use a larger number of Amazon's custom-developed chips (ASICs). While the initial $4B deal in 2023 already included some of Amazon's custom chips, they are doubling down on it now. This can be a potential problem for Anthropic as it locks them even more into the AWS ecosystem and gives them even less power in the future.

At the same time, given how OpenAI and xAI are building supercomputer data center clusters and given that GCP will surely favor its own models, it seems the smart move for Anthropic would be to »get in bed« with AWS even more and get the infrastructure capacity to be able to compete in this industry.

The cloud providers

The motivation for cloud providers is quite simple. It is to increase the infrastructure usage and be the backbone of the whole AI wave. For them, doing these exclusive deals with LLM providers is beneficial. As I already mentioned before, it increases their cloud top lines while, at the same time, they do not have to carry the losses of building these models. For them, a world where LLMs are a commodity is a dream come true as clients can use them more often, adopt them faster, and have bigger budgets to spend on infrastructure, and at the same time, no LLM provider has strong negotiating power over them.

However, they did realize that, especially for inference AI workloads, the Nvidia chips are too expensive and that providing cheap inference computing will be essential for massive adoption. That is why they are now all in a race to develop their own custom ASICs, which can handle AI inference workloads. Another benefit is that they also reduce the dependence on Nvidia, which is really important to everyone. A former Microsoft Director working on AI (2 levels from C-suite) confirmed this:

»The honest truth with you is we didn't even think about the Athena project, which is the Maia and Cobalt, until we came to that huge world of NVIDIA controls our destiny. That is not what we wanted. Any large hyperscaler would not want that.«

source: AlphaSense (get a 14-day free trial and access the full interview)

They are developing their own LLMs, working with open-source LLMs like Llama from Meta, and developing their own custom ASICs, all in service of two main goals: increasing the pace of LLM adoption among their clients (commoditizing the market helps most) and reducing their dependency on third-party chips by using their own custom silicon.

It was interesting to read an interview with a high-ranking former Google Cloud employee saying:

»Until large language model adoption becomes mainstream, I think it's still not mainstream. A lot of people are using it as a side project, but people who are building it, they eventually want to move off of OpenAI and they want to go open source.«

source: AlphaSense (get a 14-day free trial and access the full interview)

Summary

The LLM providers and the cloud providers are dependent on each other. At the same time, in the long run, given how compute-heavy and costly training LLMs is and how they are used mainly by enterprises (via cloud APIs), the two functions should merge into one company doing both. That would also make things more predictable for both sides. Even though many thought the Microsoft OpenAI investment, with Microsoft having 49% ownership in the for-profit OpenAI entity, was just that, it does not seem like that is fully the case. There is also the question of whether, if and when OpenAI restructures its corporate structure, Microsoft will keep a strong ownership stake and the influence that comes with it, or drift more into the »just partner« sphere. Definitely a thing to watch closely in the coming months.

As always, I hope you found this insightful, and until next time.

Disclaimer:

I own Amazon (AMZN), Meta (META), Microsoft (MSFT) stock.

Nothing contained in this website and newsletter should be understood as investment or financial advice. All investment strategies and investments involve the risk of loss. Past performance does not guarantee future results. Everything written and expressed in this newsletter is only the writer's opinion and should not be considered investment advice. Before investing in anything, know your risk profile and if needed, consult a professional. Nothing on this site should ever be considered advice, research, or an invitation to buy or sell any securities.
