Hi all,

It has been more than a year since I wrote my article Generative AI - which industries and what companies stand to benefit, and I decided to share some new findings. So, let's dive in.

The cloud war shifts focus

The cloud industry is one of the main industries to benefit from the rise of GenAI. This was noted in my article from last year, but what wasn't clear to me then was whether clients would start to shift to cloud providers based on the strength of their GenAI offering. Many people, including myself, thought that because of OpenAI's dominance and Microsoft's close relationship with OpenAI, Azure could start to benefit and see shifting workloads from other cloud providers, such as AWS and GCP.

A year later, I now have the answer, and that answer is NO.

The reality is that because of the fast pace of development at other LLM providers, primarily Anthropic, Meta with Llama, Google with Gemini, and even some Chinese LLMs, the difference between OpenAI's models and their competitors has shrunk substantially. While OpenAI still has brand name recognition, which often drives customers to try it out, the pattern that is emerging is that cloud clients usually test multiple LLMs before choosing one or more for their specific use case. Because of that, Azure's close relationship with OpenAI isn't a material factor in clients switching between cloud providers. If a client already has an Azure relationship, that is a different thing, but for the ones that don't, there isn't any evidence pointing to clients switching to Azure. Switching cloud providers is a very complex and costly task. It is about more than just the costs of migrating your data, which are very high; it is also about your engineering team, who have knowledge of working with one cloud provider and not the other. It is a heavy lift. So, OpenAI's offering would need to leapfrog the competition to justify the hassle of switching. And today it does not.

Another factor I view right now as more impactful in clients' decision-making process regarding the cloud provider for specific AI workloads is the availability of chips (let's say GPUs for now).

And here is a new view I am sharing with you: The next crucial battle between cloud providers will be around their custom silicon development.

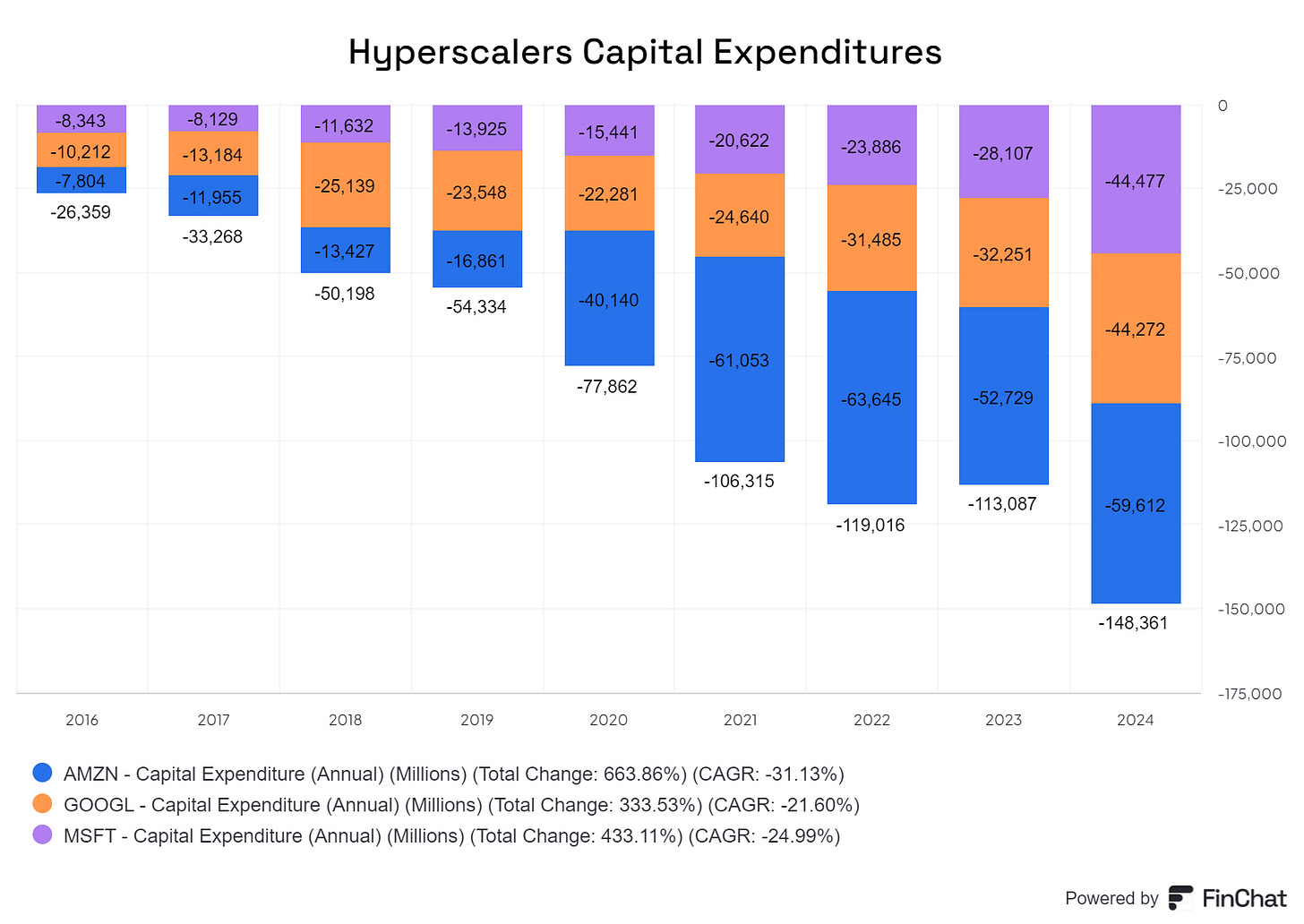

Each hyperscaler, Amazon, Microsoft, and Google, is now seriously upping its CapEx and investing heavily in new data centers, primarily AI data centers.

The reason is that nobody wants to be caught without enough capacity (GPUs or custom ASICs) to meet the growing demand from clients for AI workloads. But this is a hard ask because right now, the only chips that clients really want for LLM training are Nvidia GPUs. It is a big pain point for all cloud providers because they are in short supply and extremely expensive. Microsoft is in a unique position as, by far, their biggest »AI client« is OpenAI, of which they own a big part. On the one hand, they want to feed OpenAI with as many GPUs as possible so it continues to lead with cutting-edge models; on the other hand, they still want to offer enough GPUs to their other Azure clients and not risk losing those workloads to competitors. One of the biggest news stories, which quite frankly didn't get enough attention, was that OpenAI chose Oracle Cloud Infrastructure (OCI) to provide capacity in addition to what it gets from Microsoft Azure. It is a big hint that Microsoft doesn't have enough capacity right now and that Microsoft also doesn't want to put all of its eggs in the OpenAI basket, as it wants to offer GPU capacity to its other Azure clients.

Cloud providers want as many GPUs as they can get, but there are not enough, and they are really expensive, as Nvidia has a monopoly right now.

The solution for all the hyperscalers is the same: build your own custom silicon. Each of them is now in the process of doing that, and in my view, it is their most important area for the next 10 years. There are, however, quite significant differences in the maturity of their offerings.

Google has the most mature offering with its TPUs, which are already in their sixth generation. The next hyperscaler is Amazon with its Trainium and Inferentia chips (you can guess from the names what they are used for). Amazon has been developing chips since 2016, with the first launch of its Graviton chip in 2018 and its first generation of Trainium chips in 2020. The last one to »enter the custom chip party« is Microsoft with its Maia chips, which were announced late last year.

As this current AWS employee explains it, generations and years of developing your own chips matter:

»NVIDIA's moat is also because it was a first-mover into this market. For any player to catch up, it would at least take four, five, six generations to start actually competing with their products. Google is the only product I see in the market which is on the sixth generation right now, even after eight years down the line. Probably, TPU V7, V8 around 2026, 2027 would be the one which would be actually competing really well with the NVIDIA chips.«

source: Alphasense

Another hurdle the hyperscalers will have to overcome is Nvidia's CUDA. Most engineers have been learning CUDA since school, so even if you build out very successful custom silicon, offering it to end cloud clients is not simple, as their engineers don't know the software stack needed to optimize for it. It took Nvidia years for CUDA to become the standard. The hyperscalers don't have time to wait that long, so I sense their strategy will be to build in-house services and offerings that already incorporate the core of their chip software optimization and offer clients more tailored end solutions. The success of this strategy will largely depend on how much complexity and flexibility cloud clients demand. Working with partners who help clients integrate these solutions and striking deals with LLM providers are already moves that help bolster this strategy.

The 4th to enter the »hyperscaler« world

Another thing that came to my attention during this last year was Oracle. Oracle is the fourth hyperscaler and will be an important cloud provider in the future. Oracle Cloud Infrastructure launched in 2016, which was very late to the cloud market, but I believe it is now positioned well to increase its market share.

Oracle is also not new to chip design. In 2009, they bought Sun Microsystems for $7.4B. Most know Sun Microsystems for creating the Java programming language, but they also developed their own chips. So, Oracle has had an internal hardware design unit under its umbrella for quite some time.

There are a few reasons why I think Oracle is well positioned. I won't explain them in detail here as I plan to do a separate piece on Oracle, but here are some points I want to highlight today:

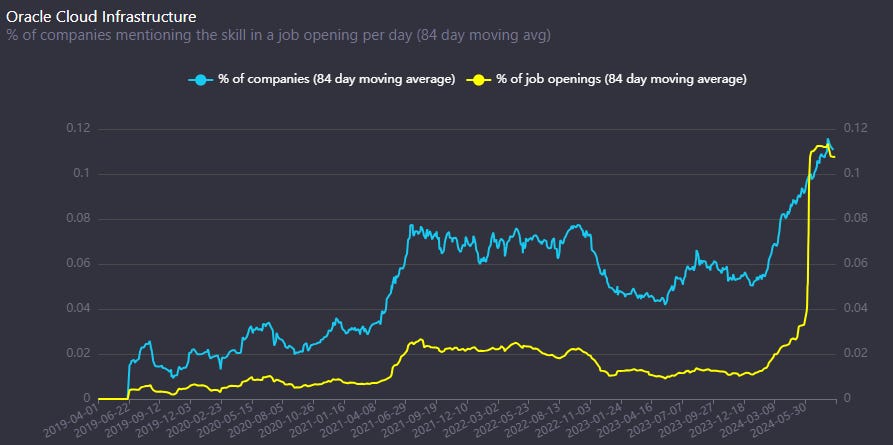

With GenAI, companies will need to use their proprietary data to fine-tune models. Many older enterprises have their most valuable data stored in an Oracle Database. Often, it is still on-prem. There is a big push to migrate that data and those workloads to the cloud to access GPUs. For GenAI, because the database is so important, having a cloud solution that is well-integrated with your database is a big benefit; hence, many companies are now choosing OCI for AI workloads. The number of job openings mentioning OCI (Oracle Cloud Infrastructure) as a needed skill is on a steep rise.

Because Nvidia doesn't want all end-customers to depend on AWS, Azure, and GCP (as they are all trying to develop their own AI chips), it is selling many of its GPUs to Oracle. This means that Oracle can benefit from the current shortage of GPUs and offer clients the compute they need.

Oracle's data center infrastructure is newer than that of other cloud providers. This is a benefit, as GPUs alone are not enough to transform traditional data centers into AI data centers. You need the supporting infrastructure to be up to date to really have a shot at transforming the data center. In an era where everyone is building new data centers at warp speed, having the ability to transform your older ones into AI data centers is an additional benefit, again increasing your overall GenAI capacity.

The LLM providers

In the last 12 months, there have been a lot of LLM developments. At some point, it felt like we got a new LLM every week that became the »new best model«. For at least the second half of 2023, there was also an obvious strategy from OpenAI. Whenever one of their competitors (Google, Meta, etc.) came out with a new model or feature that would »take the internet« and attention, they would, within a week, release their own new model or feature that would retake the attention. Sam Altman knows this strategy well, having worked with startups on the importance of keeping momentum and attention. However, that strategy required OpenAI to constantly have better and newer features ready to shock the world. The problem with that strategy is that you have to innovate and build much faster than your competitors for a long time. And from what I saw in the last 6 months, the gap that was once huge between OpenAI and its competitors has largely been closed. Don't get me wrong, OpenAI still has a significant advantage from being the first mover, and that is brand power. ChatGPT has become a mainstream word. But the technological gap seems to have closed. If OpenAI doesn't come out with a significant breakthrough model or feature by the end of this year, that will be a problem for them.

And it's not just Google that they are facing today. The dangerous ones have become Anthropic, which also has partnerships with AWS and GCP, and Meta, with its open-source Llama.

I believe Meta is the most dangerous one because, with their open-source strategy, they are destroying all the moats in the industry. Meta is also dangerous because Mark Zuckerberg has, in the last 12 months, turned most of his attention to GenAI and is putting an enormous amount of resources into having the best LLM: from computing (having a great relationship with Nvidia) to engineering talent to having one of the most extensive training databases in the world with Meta's Family of Apps.

It is scary for any competitor to see how fast Meta moves when management aligns and focuses on a segment. Here is a former Meta engineer who worked in the GenAI unit:

»They quickly moved teams. I'll give you one concrete example. When I joined the GenAI org, it was seven people. Literally, it was a team of seven people in January 2023. After three weeks, we became 200 or even 300 organizations. The company realized that there was a quick shift of bringing out teams from different organizations, merging them and creating a new organization specific for GenAI. When I left, it was at least around 600 people. I would guess it's even more. I'm saying the growth and the mobility is quite evident at Meta«

source: Alphasense

That number has probably increased substantially, since the 600 figure is from December 2023 and 8 months have passed since then. Just for comparison, OpenAI was estimated to have around 2,500 employees as of June 2024. So, we are talking about Meta having an internal GenAI unit that is probably comparable in size to OpenAI.

What is even more problematic for competitors is that, because of its open-source strategy, Meta is attracting top-tier AI talent:

»One of the remarks I made while I was at Meta for the last four years is that after the first two years, I felt a lot of influential AI talent was actually leaving the company. I felt that the replacement wasn't really clear. We didn't really hire people who are replacing those people who are leaving. Now, I can say for certain that after the Llama move, a lot of great AI talents joined Meta, which means that the strategy, which is very key, you do not want to underestimate. People are seriously considering Meta because they're open-sourcing.«

source: Alphasense

To summarize, the speed of LLM commoditization is even faster than I expected 12 months ago and it’s being driven by Meta.

Is Nvidia in a league of its own?

While I won't go into detail about Nvidia and its dominance, as I did already in the last piece, I will note that looking at the past 12 months, Nvidia continues to have a strong moat and position, especially when it comes to AI training. If no big breakthrough happens with any of its competitors, I continue to believe they will dominate for at least the next 2-3 years (talking about business fundamentals, not stock price). However, what is important to note is that we are still in the phase where AI training is taking up the bulk of the workload. Once that shifts to inference, there are more alternatives out there, from hyperscaler custom chips to newer companies like Groq. Inference by itself is a far less complicated task than training, which is why cost will be a big factor in clients' decisions. One should also note that the inference market should take off soon; if it doesn't, that means AI workloads are not going into production and clients are stuck in the testing phase, which would be bad for the whole AI market.

Inference should be a much bigger market than training, and I think Nvidia won't dominate it. I believe Nvidia will have its fair share of the market, but it won't be a monopolistic share like the one they have in training workloads.

What has become even clearer to me in this period is that Taiwan Semiconductor is a crucial company for everything. Whether we are talking about data center GPUs, custom ASIC chips from hyperscalers, or even computing on the edge (phones, laptops, etc.), Taiwan Semi is where companies go to produce their chips if they want high-performance, leading-edge technology. I often wonder what the multiple of this company would be if it weren't for the geopolitical risks.

Social media is the biggest consumer of GenAI and the first to benefit

From the GenAI use cases I am seeing right now, social media will be one of the biggest consumers of this technology. In five years, GenAI will generate a lot of the content on social media. Unfortunately, human content creators will have a more challenging time, as we will have such an abundance of content that attention will be even harder to get. Organic marketing will become more difficult, and more value will shift towards first-party data with newsletters and other mediums where you are not dependent on the social media algorithm but have independent direct contact with your audience.

However, this is positive for social media companies as it means that content will become cheaper, and their recommendation engines will have an abundance of choices. They will be able to choose the content for each individual to keep them as engaged as possible on the platforms.

Social media will also be a massive consumer of GenAI not just because of content production but also because of its help in producing ad creatives. Hyper-personalized ads with real-time ad creative flexibility will be the reality, and social platforms will have to offer that functionality in their ad managers.

AI agents are also opening up a new revenue stream for the industry. Both businesses and influencers will be able to build AI personas that can hold conversations with users via chat. For e-commerce, this can be a big new trend but also a significant disruption to the $500B call center industry, not to mention in-house customer support services, etc. With this move, social media opens up its revenue to include not just marketing budgets but also sales budgets.

So far, Meta seems best positioned for this, as it has the most advanced offering, but over time, even smaller platforms will start to see the benefits.

Social media is also one of the industries that will see benefits in revenue and profits from GenAI the fastest. AI ad creative, recommendations, and targeting affect two of the most important metrics for revenue generation for social media: time spent and effectiveness of ad targeting. It will create more ad inventory (with users being more addicted to the content) and higher CPMs, with ads being more effective because of hyper-personalized ad creative. Combining those two factors, even without AI agent revenues, it is a massive tailwind for the industry. Because social media typically operates on an auction model, the effects will be transmitted through the sector faster than usual.

Are the big guys the only ones who can play this AI game?

So far, the GenAI field has proven to be mostly the game of the Tech Titans, primarily because of the enormous costs of building the infrastructure to train and run these models. Big Tech has also found an effective way to bypass antitrust issues with their M&A by hiring away the teams of smaller companies rather than buying the company itself. This eliminates a potential competitor down the line and gains talent for internal development (Microsoft and Inflection AI being only one example).

GenAI's foundation layer is reserved for the big guys, and VC money spent here will be lost.

It is a bit different on the GenAI application layer. At this point, GenAI's application layer is still too fuzzy, so even for startups on this layer, it is highly questionable what features and value they can provide that are actually defensible against the guys building the foundation layer. The key defensible advantages remain data and distribution, which a startup rarely has.

However, some smaller companies have found a niche in serving customers with their GenAI needs: companies such as Databricks, Palantir, and even Snowflake to some extent (although they have their own AI problems). The key for them is their access to data, especially for clients that are multi-cloud and want a solution across the board. Another thing they can bank on is their relationships with clients, as right now, most clients want to do something with GenAI because everyone is asking the CEOs what they are doing about it, but they are confused and don't know what to do. So consulting services and help with building out use cases from the ground up are things clients are willing to pay for and that are in high demand.

Databricks, in particular, is accelerating very fast according to data like job postings mentioning Databricks as a needed skill.

Despite my view that startups have a really low chance of success, I am monitoring a few of them: Perplexity, Groq, and Figure AI, which all look promising.

Conclusion

For investors in this field, the initial hype is behind us, and companies will have to show actual results to justify some expectations, or we will have a period of consolidation ahead of us. There are some hardware plays, like liquid cooling companies and other areas where demand is high right now, but personally, I want to avoid going into that space at a high valuation. The reason is that software and platforms are sticky. Even when the hype cools down, the consumption stays, while hardware is often cyclical, and with many companies, the long-term margins are low for a reason, as competition can move in fast.

The industry's focus will also move to companies with access to proprietary nonpublic data that can fine-tune and distill LLMs into small language models (SLMs) that work for their specific use cases (think ERP/DMS providers and other data-rich companies).

In general, I think that without a major breakthrough by the end of the year, we are coming to a period where the hype will wane to some extent, and things will normalize more. GenAI is here to stay and has helped direct major resources toward the development of AI in general. In the coming years, we will see many new technological breakthroughs in areas like robotics, memory, AI assistants, AVs, and more. AI will be as impactful as the internet, if not more so, and for an investor, I think there will be many opportunities down the road now that the initial hype cycle is behind us.

I hope you find it useful.

As always, if you liked the article and found it informative, I would appreciate you sharing it.

Until next time.

Disclaimer:

I own Meta (META), Oracle (ORCL) and Amazon (AMZN) stock.

Nothing contained in this website and newsletter should be understood as investment or financial advice. All investment strategies and investments involve the risk of loss. Past performance does not guarantee future results. Everything written and expressed in this newsletter is only the writer's opinion and should not be considered investment advice. Before investing in anything, know your risk profile and if needed, consult a professional. Nothing on this site should ever be considered advice, research, or an invitation to buy or sell any securities.

Thanks a lot for the great writeup!

I'm still skeptical of inference vs training changing much, at least in the next few years. I agree that at steady state inference should be vastly greater than training, but we are in an early experimental phase - there is going to be a lot of appetite to keep hammering on new paths - we've barely seen the multimodal thing take off. We haven't seen any major player try video generation yet - just a few smaller participants like Runway.

Richard, a very useful article. Nice work.