Google is doing better than expected & why investors are getting overly pessimistic

Hi everyone,

I have written many articles on Google and shared my thoughts on it, from the innovator's dilemma it is facing, which I wrote about a year ago and still believe is true (link to article), to the most recent one, where I made the case that investors should not fear even a breakup of the company, as the sum of its parts is worth more than the company's market cap. At that time, I restarted my position in Google, and with the recent market turmoil, I have increased it even more. I decided to share the findings that led me to increase my Google position and that give me more confidence:

Search might have a longer run rate

GCP looks like the best-positioned cloud right now

Waymo breakthrough might be sooner than we think

Google's culture and pace of delivering products are starting to change

New revenue streams from AI are becoming clearer

Valuation gives enough margin of error

Let's start with Search.

Search in the new AI age

I still believe that LLM Search will disrupt traditional Search in the future. With that disruption in mind, my main fears for Google were:

First, that they would not accept that they face the innovator's dilemma and would try to push traditional Search for longer than makes sense.

Second, that the new LLM Search would, in the long term, not be monetizable at the same high rate as traditional Search.

Third, that Search would go from the monopoly Google has built to a market where many competitors offer their own versions of LLM Search, causing the market to fragment.

It has been more than a year since I first stated these fears, and we now have some data that helps us judge how significant they are.

First, let's discuss Google facing the innovator's dilemma. To give Google credit, it recognizes that it is facing it, and as it looks today, it is willing to cannibalize the traditional Search product for the new LLM Search. Just a few days ago, Google's CFO Anat said the following at the Morgan Stanley Technology Conference:

An analyst: »There is this discussion about the innovator's dilemma. Alphabet won't change search fast enough because they don't want to disrupt themselves. How do you think about that internally and you see these great things in the lab and you say, wow, this would be great to consumers.«

Google CFO: »So I have -- I always talk about self-disruption. This is just my -- I like to talk about this. And I think you should always look to disrupt your own innovation and not wait for someone else to do it, and that's the thinking that we have.«

To their credit, on the last earnings call, Sundar said that 2025 will be an important year for Search, with many new innovations coming to it. In fact, Google's latest moves show that it has already taken the path of cannibalization: it released its standalone Gemini app for iOS at the end of last year, and it is rolling out AI Overviews (LLM-style answers at the top of the search results when users google something) quite aggressively across different countries in its traditional Search.

The stickiness of Search as a product is also helping Google extend its lifespan, despite new Search alternatives popping up every day. For me, a vital data point from Google's latest earnings results is the following statement from Sundar:

»We are continuing to see growth in Search on a year-on-year basis in terms of overall usage. Of course, within that, AI Overviews has seen stronger growth, particularly across all segments of users, including younger users, so it's being well received.«

This matters because Sundar described Search growth in terms of overall usage, not revenue, and those are two different things. On top of that, AI Overviews inside Search are leading users to ask more queries while keeping the search experience as relevant as before. The cannibalization of Search has already started, and I expect it to accelerate as Google rolls out more changes to Search this year.

Remember, the worst thing for a company facing an innovator's dilemma is to deny it. The best thing to do is accept it and cannibalize your cash cow, which Google is starting to do.

Monetization of »New Search«

Moving to monetization, I want to highlight a few more things that emerged in the last 12 months. So far, the prevailing business model for LLM companies is subscriptions for higher-tier products, offering a more capable model, fewer limitations, or more tokens. No Google competitor has yet pushed hard toward monetizing LLMs via ads. The obvious reason is that the LLM market looks very different from a year ago, when OpenAI had a strong lead over all others and Google was in second place, but with quite a gap. Today, multiple companies offer models with very similar performance, from OpenAI, Google, Anthropic, and Meta to even Chinese companies like DeepSeek and Alibaba.

In this highly competitive market, pushing toward ads can hurt the user experience, and since every player is fighting for users and scaling its product, nobody wants to do that. Google is no exception, as it said on its last earnings call regarding the monetization of Gemini:

»On the monetization side, obviously, for now, we are focused on a free tier and subscriptions. But obviously, as you've seen in Google over time, we always want to lead with user experience. And we do have very good ideas for native ad concepts, but you'll see us lead with the user experience. And -- but I do think we're always committed to making the products work and reach billions of users at scale. And advertising has been a great aspect of that strategy. And so just like you've seen with YouTube, we'll give people options over time.«

Another important comment Google made on the call concerned the monetization of AI Overviews:

»And as I talked about before, for the AI Overviews, overall, we actually see monetization at approximately the same rate, which I think really gives us a strong base on which we can innovate even more.«

And we got even more details from the CFO at the Morgan Stanley Tech Conference:

»and I think we've shared this before, we're monetizing search on -- with AI Overviews at the same rate as non-AI Overview search, which is great from a monetization perspective. AI Overview where you get -- it's more targeted now and what you're going to be served with in terms of advertisement. So it's beneficial for advertisers, it's beneficial to our users, beneficial for us as well. «

This means that while AI Overviews carry less ad inventory because the ads are more targeted, the CPMs on them are much higher: advertisers are paying higher prices for these ads because their conversion rates are also higher. If this trend continues, it will be a dream scenario for Google.
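To make the inventory-versus-price trade-off concrete, here is a minimal Python sketch. It assumes a deliberately simplified model in which revenue per query is just the number of ads shown per query multiplied by the average price per ad; the 25% inventory drop and the ad counts are hypothetical illustration values, not figures Google has disclosed.

```python
def revenue_per_query(ads_per_query: float, price_per_ad: float) -> float:
    """Simplified model: revenue per query = ads shown * average price per ad."""
    return ads_per_query * price_per_ad


def required_price_multiplier(inventory_drop: float) -> float:
    """Price increase per ad needed to keep revenue per query flat.

    If ads shown fall by `inventory_drop` (0.25 means 25% fewer ads),
    the price per ad must rise by 1 / (1 - inventory_drop).
    """
    if not 0 <= inventory_drop < 1:
        raise ValueError("inventory_drop must be in [0, 1)")
    return 1 / (1 - inventory_drop)


# Hypothetical inputs, not Google data: a classic results page shows 4 ads,
# an AI Overview query shows 25% fewer (3 ads).
baseline = revenue_per_query(ads_per_query=4.0, price_per_ad=1.0)
multiplier = required_price_multiplier(0.25)
ai_overview = revenue_per_query(ads_per_query=3.0, price_per_ad=1.0 * multiplier)

print(f"price per ad must rise {multiplier:.2f}x")
print(f"revenue per query: classic {baseline:.2f}, AI Overview {ai_overview:.2f}")
```

Under this simplified model, a 25% drop in ad slots needs roughly a 33% higher price per ad to keep monetization at "the same rate", which is the kind of offset the CPM argument above relies on.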

Because no competitor is leaning strongly toward an LLM experience monetized through ads, as nobody wants to hurt their user experience, advertisers have nowhere else to go but Google for search ads.

From what I have seen so far, I expect the LLM search market to evolve similarly to Netflix and the streaming market: you push the subscription model as long as you keep growing and can raise prices without significant churn. Once that point is reached, you add an ad-supported tier.

Another consequence of more people using LLMs and AI Overviews in recent months is that many website owners report less organic traffic. While this looks like a problem for Google, in the short term it is actually helping: because organic traffic is weakening, advertisers must lean more on paid campaigns to get the same traffic as before, which helps lift ad prices for Search and AI Overview ads.

So my conclusion on monetization is, firstly, that it is great for Google to see similar monetization levels on AI Overviews as on non-AI Overview searches. Secondly, because the industry is adopting the subscription model and no »new Search« ad inventory is coming online, advertisers are being pushed toward Google's paid ads. This gives Google much-needed time for its LLM Search to cannibalize traditional Search quietly and gradually.

More competitors are popping up, and Google is not a monopoly anymore

My last fear regarding the Search disruption was that, thanks to easy access to LLMs, including open-source ones, the basic ability to answer a question would become available in tons of apps and products. For now, this fear is as strong as it was back then, as it has become clear to me that almost every product and app will offer a way to answer questions, a need that was previously served mainly by one company, Google. Today, every Meta app comes with Meta AI, Copilot is built into Microsoft's products, and Salesforce and many others offer their own LLM features. So this point still stands: Google's market has gone from a monopoly to a fully diversified one, where answering a question with quality is no longer a moat.

My conclusion on Search is that, on two of my three concerns, the risks have decreased, and with them, Google's Search business has become more valuable than it was a year ago, when many of these questions had no clear answers. The shift from traditional Search to LLM Search may be a gradual, soft transition rather than a hard landing. At the same time, in monetary terms, Search itself will probably be less valuable than traditional Search was, but given the valuation levels (which we will come to in a later section), the market is, in my view, already discounting that risk.

GCP is in a prime position

For me, the most important piece regarding Google today is not Search or YouTube. It is its cloud business, GCP.

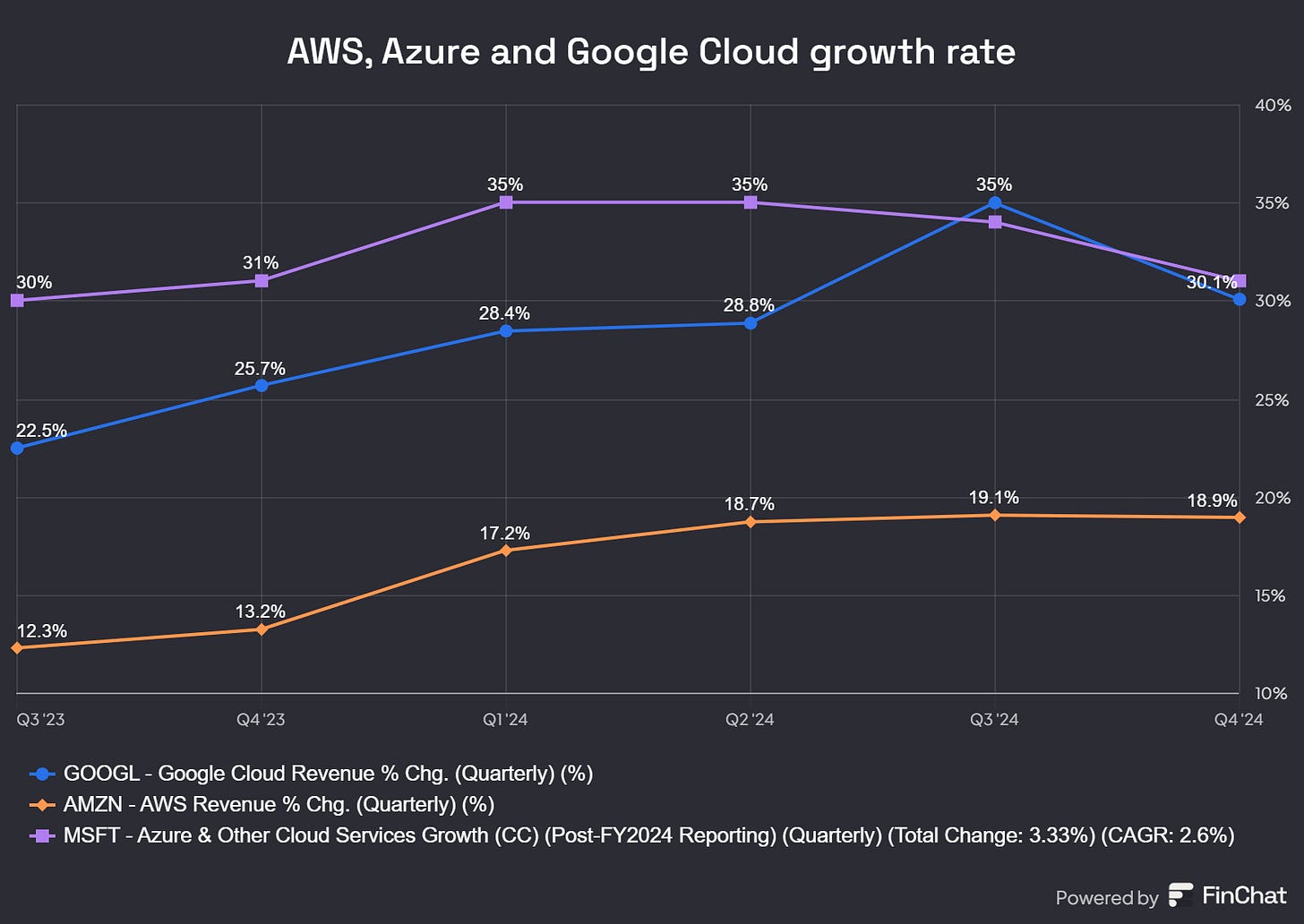

Here are the numbers that the cloud providers reported regarding their cloud business in terms of growth:

We can see that they all reported a slight deceleration from the previous quarter while still growing strongly. While Google reported the biggest deceleration among the hyperscalers, it is important to note that the Google Cloud segment is not just GCP; it also includes Google Workspace (formerly G Suite) revenue and other cloud products. The vital context here is this comment from the earnings call:

»Once again, GCP grew at a rate that was much higher than cloud overall.«

Given this comment, it's reasonable to assume that GCP is growing in the 35-40% range rather than at the 30.1% reported for the whole Google Cloud segment, which would make it by far the fastest-growing hyperscaler. Google made similar comments about GCP growth on past earnings calls, but this is the first time it used the wording »much higher«. In the past, it was just »higher«, potentially signaling that GCP's acceleration this quarter is even more significant.
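The step from the 30.1% segment figure to a 35-40% GCP estimate is a weighted-average calculation: segment growth is the revenue-weighted average of its parts' growth. The minimal sketch below backs out GCP's implied growth. Google discloses neither GCP's share of segment revenue nor the growth of Workspace and the other products, so the 65-85% shares and the 10% "other" growth rate are hypothetical placeholders chosen only to show the sensitivity, not estimates.

```python
def implied_part_growth(total_growth: float, part_share: float, other_growth: float) -> float:
    """Back out one sub-segment's growth from the segment total.

    Segment growth is the base-period revenue-weighted average of its parts:
        total = share * part + (1 - share) * other
    which rearranges to:
        part = (total - (1 - share) * other) / share
    """
    if not 0 < part_share <= 1:
        raise ValueError("part_share must be in (0, 1]")
    return (total_growth - (1 - part_share) * other_growth) / part_share


# 30.1% is the reported Google Cloud segment growth from the text.
# GCP's revenue share and the 10% growth for Workspace and other products
# are hypothetical placeholders, not disclosed figures.
for share in (0.65, 0.75, 0.85):
    gcp_growth = implied_part_growth(0.301, share, 0.10)
    print(f"GCP share {share:.0%}: implied GCP growth {gcp_growth:.1%}")
```

With the other products growing at a hypothetical 10%, a GCP share between 65% and 85% of the segment implies GCP growth of roughly 34% to 41%; the slower the rest of the segment grows, the higher GCP's implied rate.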

Another essential point is that Google cited two factors weighing on its YoY growth. First, it faced a tough comparison with Q4 2023: as the chart above shows, Google Cloud's acceleration from Q3 2023 to Q4 2023 was the biggest among the cloud providers, thanks to strong AI deployments in Q4 2023. Second, growth was limited by a lack of capacity to serve clients:

»we had a very strong demand from customers, and we didn't have the capacity to match that demand. In fact, I looked at our list of customers because I wanted to make sure it's real and who are you going to serve when, long list of customers that we're going to be able to get to, but we ended the year with more demand than supply.

we're seeing -- and this is an important thing, growth both within our existing customer base and by adding new customers.«

Microsoft and Amazon also mentioned a lack of capacity, as they don't have enough AI compute to serve their clients, but I think this point matters especially for Google and GCP.

Because there is a lack of capacity across the whole industry right now, and because no LLM provider has a significant edge over the others (OpenAI has lost that edge, at least with enterprises), clients' primary criterion for AI workloads is the cost of compute. And the further we go into 2025, the more I think the vital cost, if we dissect it further, is the cost of inference compute. This is where GCP's main edge comes into play: its custom AI chips, TPUs.

I won't go into detail about TPUs, as I covered them in my previous article, but as a former Google unit head mentioned (source: AlphaSense), the latest generation of TPUs is approximately 66% less expensive to operate than GPUs, with previous generations around 40-45% lower in cost. It is also important to understand that Google is on its sixth generation of TPUs, which are far more mature than Amazon's and Microsoft's custom AI ASICs. In chip development, the number of generations matters: you learn a lot with each one, and it takes a while for chips to become effective and yield good returns. As the hyperscaler battle moves toward custom ASICs, Google knows it has the advantage:

»Be it at the Pareto frontier we mentioned, and I think our full stack approach and our TPU efforts all play give a meaningful advantage. And we plan -- you already see that.

I know you asked about the cost, but it's effectively captured when we price outside, we pass on the differentiation. It's partly why we've been able to bring forward Flash models at very attractive value props, which is what is driving developer growth. We've doubled our developers to 4.4 million in just about 6 months. Vertex usage is up 20x last year. And so all of that is a direct result of that approach, and so we'll continue doing that«

Looking at alternative data sources, it seems that GCP is starting to gain momentum.

Counting the companies that mention AWS, Azure, or GCP as a required skill in their job openings puts the current split between the hyperscalers at 40% AWS, 40% Azure, and 20% GCP. Even more interesting, comparing the data from when ChatGPT launched (so pre-LLM) with today shows that GCP has, on this metric, taken share from both AWS and Azure (despite Microsoft's strong tie with OpenAI), which I wouldn't have expected. And recently, as the conversation shifts toward inference, GCP seems to have gained even more momentum, with more new job openings listing GCP as a required skill, as this chart shows:

For full context, the 2025 bump we see for GCP does not appear in the same data for AWS or Azure.

On the recent call, Google also shared some valuable data about its growing demand:

»In fact, today, Cloud customers consume more than 8x the compute capacity for training and inferencing compared to 18 months ago.«

And their AI developer platform Vertex AI seems to be growing nicely:

»Our AI developer platform, Vertex AI, saw a 5x increase in customers year-over-year. Vertex usage increased 20x during 2024 with particularly strong developer adoption of Gemini Flash, Gemini 2.0, Imagen 3 and most recently, Veo.«

Some of this may be statistical noise or simply reflect Google's smaller starting base, but all the data and opinions I have read and analyzed give me confidence that GCP's market position won't shrink in the new AI age. As a shareholder, I am happy that Google plans to invest $75B in CapEx in 2025, with, as it mentioned, about half going toward the cloud business: I want it to lean in and build out capacity so it can keep growing in this generational super cycle for the cloud industry.

Additional things that had an important weight in my decision

A few more things have changed in the last few months. Let's start with the first one. Since December last year, there has been a noticeable change in Google's speed of development and product shipping. In recent years, I have not been a fan of Google's culture, as many people, including company insiders, have seen it as a place where employees come for a good comp package and to chill. The company had also become careless with capital allocation on its Other Bets, and there was a general lack of urgency, stemming largely from the ZIRP era and its monopoly position in the market. So the LLM breakthrough was a big shock to the culture, and I was skeptical about how fast Google could turn this tanker back into a speedboat.

But in December, I saw some positive signs. When OpenAI started posting new announcements every day for 12 days leading up to Christmas, Google was ready and mostly outshone OpenAI from a marketing and PR perspective, taking the spotlight from OpenAI's planned »PR days« with its own product announcements and launches. I am also seeing more insiders, like Google's CFO at the Morgan Stanley Tech Conference a few days ago, speaking about this topic, and many reports suggest that a sense of urgency is back at Google, with Sergey Brin back at the company working on AI.

»So certainly having tremendous innovation engine is insufficient if you're not getting it to users quickly and you're not driving adoption. So that whole continuum and that flywheel needs to be -- to work well. And I think you've seen, if you just looked at the announcements we made over the past 3 months, really every month, sometimes every week in certain months, we've announced something, whether it's Gemini 2.0 or some of our video models or agentic or in other parts of the business, Waymo, we're just -- Willow -- keep announcing new innovation. And that's the other piece of my focus is how do we make sure we get that to consumers, enterprises, creators faster and then drive rapid adoption«

Google CFO

Another important thing I am keeping a close eye on is Waymo, which is starting to gain traction: last year it served more than 4M passenger trips, and it now averages more than 150,000 trips each week and keeps growing. On top of this, Waymo is expanding to new U.S. cities and even internationally, to Japan. In my view, Waymo's best five years are ahead of it, as the technology stack to support AVs is in place and consumer sentiment is starting to favor it.

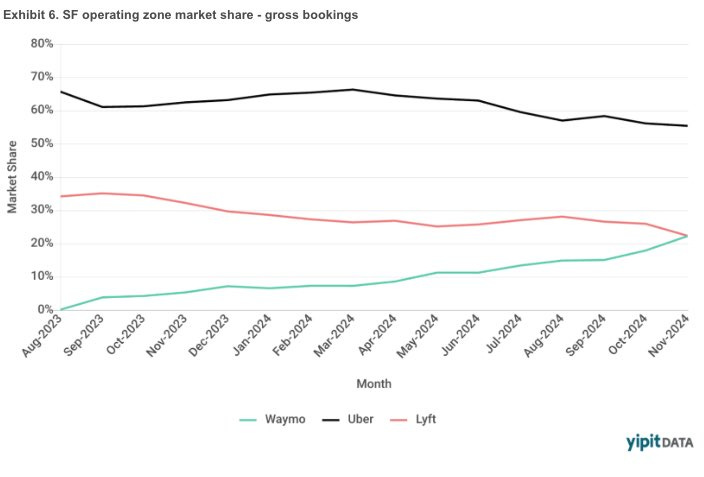

According to YipitData, gross bookings in San Francisco show that Waymo has caught up with Lyft and has been growing fast since 2024.

User retention data is also interesting as it might signal that having no driver benefits many users.

Also, in the many expert interviews with industry insiders I have read, a common theme is that, technologically, Waymo is seen as either the AV leader or a close number two behind Tesla (I should note that most see Waymo as the leader).

The last point that has significantly increased my conviction in Google, before we move to valuation, is the potential for new revenue streams from LLMs and GenAI. While there are many directions this could take, the ones I find most exciting are consumer AI assistants and enterprise AI workers.

When it comes to consumer AI assistants, I see a world where each person has their own AI assistant, and where that assistant doesn't just do »search-like things« for you but goes much deeper: from helping you gather information to executing tasks on your behalf, such as booking a vacation, resolving an insurance claim, and much more. In this world, I see consumers willing to pay a monthly subscription for such an assistant, as it saves them time and helps them make much more informed decisions. While AI Search might not bring Google the same revenue that traditional Search has, this new subscription revenue could fill the gap or even expand it.

Similarly, we can see LLM providers moving in this direction with enterprises. The big market will be LLM providers offering AI workers that fully take over specific tasks, like customer service, accounting, marketing, and even low-level coding. There are already rumors that OpenAI is preparing an AI worker that will cost companies $2,000+ per month. This is another revenue stream where companies could see significant TAM expansion, as we are no longer talking just about software budgets for tech products but about labor budgets. At the current pace of development, these companies will be able to build and custom fine-tune AI workers that can fully replace specific jobs in today's economy. And since the West faces tight labor markets right now, this is something society wants rather than resents, so I expect regulators won't restrict it, at least in the short term.

Within the enterprise AI worker offering, I believe cloud providers and ERP/CRM providers are best positioned, as they have access to your workflows and data and can therefore integrate and custom fine-tune these workers far better than other companies. Again, GCP's growing role should translate into many deals won in the AI worker market, especially given Google's custom-chip cost advantage.

Valuation

Now, on to the last part of my thesis, and one of the most interesting: valuation.