Meta’s AI Ambitions: What’s Really Going On

Hi everyone,

Meta just reported its earnings yesterday, showing why AI is the company's core focus. For this article, I focus on two areas: first, why Meta is building its superintelligence group and who these individuals are, and second, what AI does for Meta in terms of current and future revenue and profit opportunities.

Let's dive into it.

Meta's Superintelligence unit

As most of you have likely heard from various news reports, Meta has been on a massive AI talent poaching spree over the last two months. Many outlets reported that Meta is offering compensation packages of over $100M to get researchers to join its new Superintelligence unit. The reason, bluntly put, is that over the last few months Meta has fallen behind the frontier AI labs with its AI models and wants to course-correct significantly. It fell behind mainly because of suboptimal training decisions, inadequate post-training strategies, and ineffective leadership. SemiAnalysis has already described some of those mistakes:

Chunked attention – a long-context attention mechanism Meta chose that introduced blind spots.

Expert choice routing – a Mixture-of-Experts (MoE) training strategy that was altered mid-run.

Pretraining data quality issues – problems with the scale and cleanliness of the training data.

Scaling strategy and coordination – disorganized research experiments and poor leadership decisions.

Underdeveloped internal evaluation frameworks
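To make the first point more concrete, here is a minimal sketch of how chunked attention can create blind spots. It assumes a simplified form in which each token attends only to earlier tokens inside its own fixed-size chunk. The function name and chunk size are hypothetical, and this is an illustration rather than Meta's actual implementation.

```python
def chunked_causal_mask(seq_len: int, chunk_size: int) -> list[list[bool]]:
    """Return mask[q][k], True when query token q may attend to key token k.

    Assumption: attention is causal (k <= q) and restricted to tokens
    that fall in the same fixed-size chunk as the query.
    """
    return [
        [k <= q and (k // chunk_size) == (q // chunk_size) for k in range(seq_len)]
        for q in range(seq_len)
    ]


mask = chunked_causal_mask(seq_len=8, chunk_size=4)

# Token 4 opens the second chunk, so it cannot see its direct neighbor, token 3.
print(mask[4][3])  # False
# Inside a chunk, earlier tokens stay visible.
print(mask[3][0])  # True
```

In this simplified picture, a token right after a chunk boundary loses direct access to the tokens just before it, which is the kind of blind spot the list refers to.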

Due to these mistakes and the importance of AI in Mark Zuckerberg's eyes, Meta made a significant course correction. On the 12th of June, it announced that it would invest $14.3B in Scale AI for a 49% equity stake. More importantly, Scale AI's CEO, Alexandr Wang, would join Meta to lead its Superintelligence unit, and Meta also took on some other talented people from Scale AI. Scale AI is an important company in the AI model development ecosystem: it is a data labeling company whose clients included both Google and OpenAI. Following the deal, Google has reportedly ceased working with Scale AI, and OpenAI is likely also exploring alternative options. Without going into too much detail, the Scale AI acquihire started all of this. Here is the list of people, along with their backgrounds, that Meta has poached from OpenAI, Apple, Google, and Anthropic within that two-month time frame:

Alexandr Wang (Scale AI) - A high-profile Silicon Valley entrepreneur, Wang founded Scale AI in 2016 (after dropping out of MIT) and built it into a premier platform for training data, supplying labeled datasets to firms like OpenAI. Unlike many academic AI researchers, Wang is known as an adept business leader who scaled AI services and even advised U.S. policymakers on AI.

Nat Friedman - former GitHub CEO.

Lucas Beyer, Alexander Kolesnikov, Xiaohua Zhai (OpenAI Zurich). All three are renowned specialists in computer vision and large-scale machine learning – in fact, during their prior tenure at Google Brain, they co-authored influential research (such as pioneering work on the Vision Transformer model in 2020). At OpenAI, they helped stand up the Swiss branch focused on cutting-edge model training. All three are considered high-profile in the AI research community for their work advancing computer vision and model scaling techniques.

Shengjia Zhao (OpenAI) – A co-creator of ChatGPT and GPT-4, Zhao had led OpenAI’s synthetic data generation efforts (crucial for training robust models). His work underpinned ChatGPT’s development, making him a high-profile hire. In late July, Zuckerberg announced Zhao would become Chief Scientist of Meta’s Superintelligence Labs.

Jiahui Yu (OpenAI) – An expert in multimodal AI, Yu co-created several of OpenAI’s scaled-down “GPT-4 mini” models and GPT-4.1. He previously led OpenAI’s perception team (working on vision capabilities for models) and, before that, co-led multimodal model development for Google DeepMind’s Gemini project. Yu brings experience in bridging image and language AI.

Shuchao Bi (OpenAI) – A leading engineer in AI voice and multimodal training at OpenAI, Bi was the co-creator of GPT-4’s voice mode and of the smaller “GPT-4o” and “o4-mini” models. He headed OpenAI’s multimodal post-training team, fine-tuning models to handle inputs like speech and images.

Hongyu Ren (OpenAI) – An OpenAI research lead who co-developed the “O-series” internal models (such as GPT-4o and various “mini” GPT-4 prototypes). Ren led a group focused on post-training optimization at OpenAI, refining model reasoning.

Trapit Bansal (OpenAI). Bansal is known for pioneering “RL-on-chain-of-thought”, a technique combining reinforcement learning with chain-of-thought prompting to improve reasoning in AI. He was a co-creator of OpenAI’s “O-series” reasoning models (internal experimental models that contributed to GPT-4’s development).

Jack Rae (Google DeepMind). Rae was the pre-training tech lead for Google’s upcoming Gemini AI model and led the reasoning efforts for DeepMind’s Gemini 2.5 project. He had also spearheaded earlier LLM projects at DeepMind, serving as a lead on the Gopher and Chinchilla language models that helped establish scaling laws for AI.

Johan Schalkwyk (Google) – A veteran Googler, Schalkwyk is a former Google Fellow (a title for Google’s top engineers) who was an early contributor to Google’s voice-AI initiatives (codenamed “Sesame”) and the technical lead for a conversational AI project known internally as “Maya”. He brings deep expertise in speech recognition and voice-driven AI.

Huiwen Chang (Google Research & OpenAI). Chang is a researcher known for innovations in image generation AI. At Google Research, she invented MaskGIT and Muse, two novel text-to-image generation architectures. She also co-developed GPT-4’s image generation component during a stint collaborating with OpenAI.

Pei Sun (Google DeepMind/Waymo). Pei Sun joins from Google’s ranks as well; he worked on DeepMind’s Gemini project focusing on post-training, coding, and reasoning modules. Earlier, Pei Sun was at Alphabet’s Waymo, where he created the last two generations of Waymo’s perception models for self-driving cars.

Joel Pobar (Anthropic). Pobar is an engineering veteran who moved from OpenAI’s rival Anthropic. At Anthropic, he worked on model inference (optimizing how AI models run).

Ruoming Pang (Apple). Apple’s top executive for AI models. Pang had been the head of Apple’s Foundation Models team, meaning he oversaw development of Apple’s large-scale AI systems that power features like on-device Siri intelligence and multimodal capabilities. A distinguished engineer, Pang was responsible for Apple’s most advanced AI initiatives (and had previously been at Google before Apple hired him in 2021). His defection to Meta came with a massive compensation package – reportedly on the order of $200 million in total value.

Mark Lee and Tom Gunter (Apple), both senior Apple researchers in the foundation models team. At Apple, Lee and Gunter worked under Pang on developing advanced AI features – likely including multimodal AI systems and on-device machine learning capabilities for future Apple products.

Bowen Zhang (Apple). Zhang was instrumental in Apple’s internal efforts to build multimodal AI systems (combining text, vision, etc.) and was part of Pang’s group.

I probably missed some, but this list gives you a good sense of who these people are. They will be key to Meta's AI success, since Zuckerberg sees a small team as the way forward for AI:

»In terms of the shape of the effort overall, I guess I've just gotten a little bit more convinced around the ability for small talent-dense teams to be the optimal configuration for driving frontier research. And it's a bit of a different setup than we have on our other world-class machine learning system. So if you look at like what we do in Instagram or Facebook or our ad system, we can very productively have many hundreds or thousands of people basically working on improving those systems, and we have very well-developed systems for kind of individuals to run tests and be able to test a bunch of different things. You don't need every researcher there to have the whole system in their head. But I think for this -- for the leading research on superintelligence, you really want the smallest group that can hold the whole thing in their head, which drives, I think, some of the physics around the team size and how -- and the dynamics around how that works.«

Interestingly, the CEO of Nvidia was recently asked about the small-team strategy on the All-In Podcast and agreed with it, saying:

»150 AI researchers with enough funding behind them can create an OpenAI«

So gathering top AI talent, even at a cost of billions, is actually a very good strategy, because a small team is an advantage in frontier AI model development. This move not only improved Meta's chances of success but also set back many of its competitors, which lost key AI talent (giving Meta more time to get back to the frontier). It also helps Meta, despite being a large tech company, move faster and more flexibly so that it can compete with smaller, nimbler teams like OpenAI.

But why does Meta want to be the one developing AI so badly that it needs this Superintelligence unit? Let's dive into the areas where AI is already helping Meta and the new opportunities GenAI opens up for it.

The effect of AI on Meta

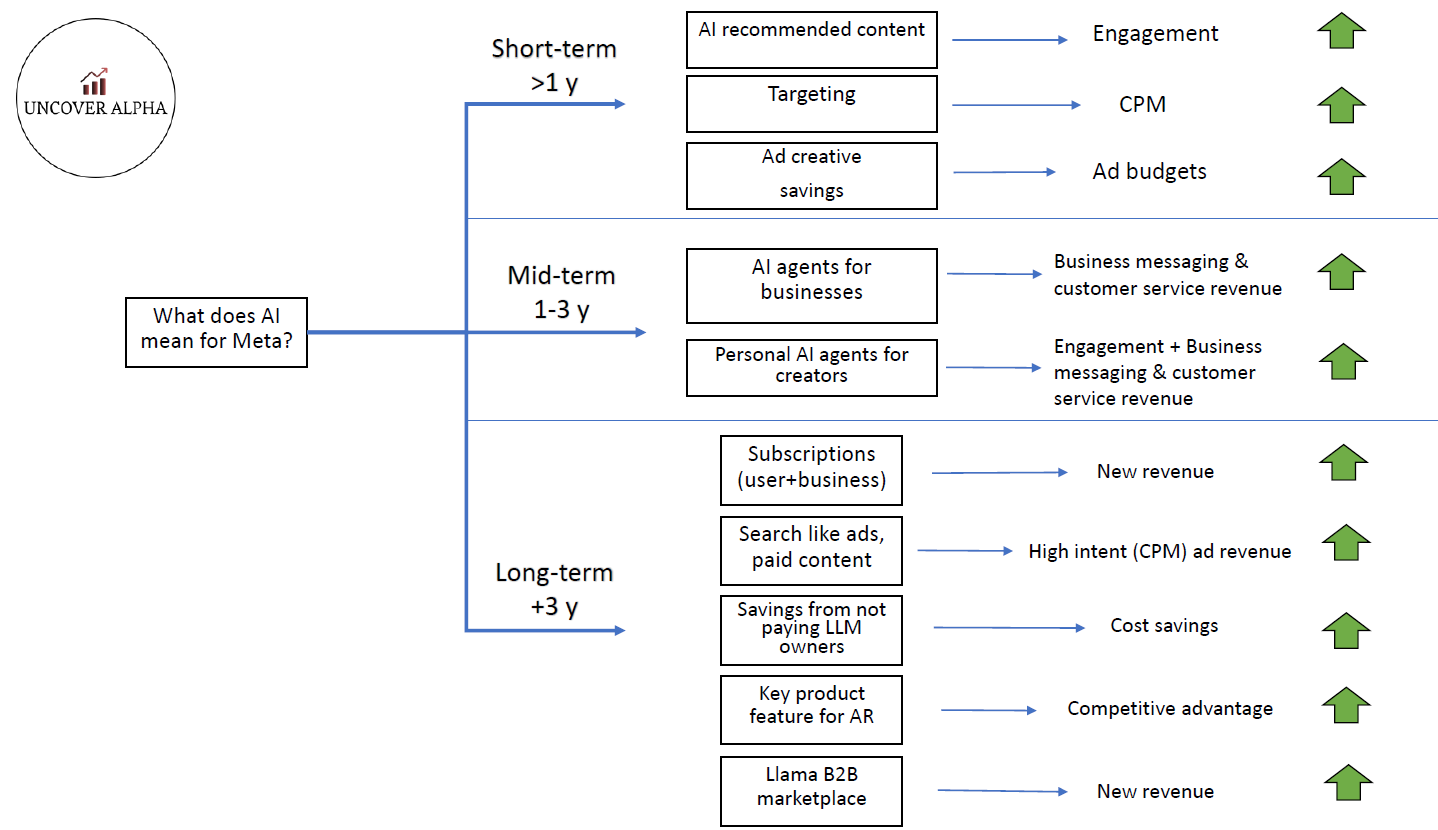

Back in April last year, I published an article and this chart around Meta and AI:

And I have to admit, despite the fast-changing pace of AI, most of the things still stand true today.

But in this earnings call, Mark Zuckerberg framed it around five pillars, to which I have added a sixth:

1. Improved advertising

2. More engaging experiences

3. Business messaging

4. Meta AI

5. AI devices

6. Cost savings (added by me, not Zuck)

Let's dive into each of these.

Improved advertising

Meta reported 22% YoY revenue growth this quarter, which was well above estimates. The company attributes much of this to advertising improvements unlocked by AI:

»In advertising, the strong performance this quarter is largely thanks to AI unlocking greater efficiency and gains across our ad system. This quarter, we expanded our new AI-powered recommendation model for ads to new surfaces and improved its performance by using more signals and longer context. It's driven roughly 5% more ad conversions on Instagram and 3% on Facebook.«

Since Meta is not a high-intent platform like Google Search, it should be one of the biggest beneficiaries of AI: ads that are better targeted and more creative influence people's impulse purchases, which helps drive conversions. If advertisers see better conversions on their ads, they increase their ad budgets. This trend is already visible in Meta's ad pricing and in the results from its Andromeda model architecture:

»Impression growth accelerated across all regions due primarily to engagement tailwinds on both Facebook and Instagram and to a lesser extent, ad load optimizations on Facebook. The average price per ad increased 9%, benefiting from increased advertiser demand, largely driven by improved ad performance.«

»The Andromeda model architecture we began introducing in the second half of 2024 powers the ads retrieval stage of our ad system, where we select the few thousand most relevant ads from tens of millions of potential candidates. In Q2, we made enhancements to Andromeda that enabled it to select more relevant and more personalized ads candidates while also expanding coverage to Facebook Reels. These improvements have driven nearly 4% higher conversions on Facebook Mobile Feed and Reels.«

Meta also has a new Generative Ads Recommendation system (GEM) that is showing results:

»Our new Generative Ads Recommendation system, or GEM, powers the ranking stage of our ad system, which is the part of the process after ads retrieval where we determine which ads to show someone from candidates suggested by our retrieval engine. In Q2, we improved the performance of GEM by further scaling our training capacity and adding organic and ads engagement data on Instagram. We also incorporated new advanced sequence modeling techniques that helped us double the length of event sequences we use, enabling our systems to consider a longer history of the content or ads that a person has engaged with in order to provide better ad selections. The combination of these improvements increased ad conversions by approximately 5% on Instagram and 3% on Facebook Feed and Reels in Q2.«
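To picture how the two stages described in these quotes fit together, here is a minimal sketch of a retrieve-then-rank pipeline. It is a toy illustration under stated assumptions, not Meta's Andromeda or GEM: the `Ad` fields, the scores, and the function names are all hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Ad:
    ad_id: str
    cheap_score: float           # hypothetical fast relevance estimate (retrieval)
    predicted_conversion: float  # hypothetical output of a heavier ranking model


def retrieve(candidates: list[Ad], k: int) -> list[Ad]:
    """Retrieval stage: keep the k candidates with the highest cheap score."""
    return heapq.nlargest(k, candidates, key=lambda ad: ad.cheap_score)


def rank(shortlist: list[Ad], slots: int) -> list[Ad]:
    """Ranking stage: order the shortlist by predicted conversion, fill the slots."""
    ordered = sorted(shortlist, key=lambda ad: ad.predicted_conversion, reverse=True)
    return ordered[:slots]


def select_ads(candidates: list[Ad], k: int, slots: int) -> list[Ad]:
    """Run retrieval first, then ranking on the surviving shortlist."""
    return rank(retrieve(candidates, k), slots)


inventory = [
    Ad("a", 0.9, 0.02),
    Ad("b", 0.8, 0.05),
    Ad("c", 0.7, 0.04),
    Ad("d", 0.1, 0.09),  # converts best, but is dropped at retrieval
]

shown = select_ads(inventory, k=3, slots=2)
print([ad.ad_id for ad in shown])  # ['b', 'c']
```

In this toy data, ad "d" would have converted best but never reaches the ranking stage. That is why gains at the retrieval stage and gains at the ranking stage can each lift conversions on their own.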

In addition, advertisers with ad creative tools can now